TL;DR

- 4,000+ integration tests generated automatically using AI

- Test suite creation compressed from 2 weeks to 2 hours per API

- Multi-agent system applies formal methodologies: boundary analysis, equivalence partitioning, state transitions

- Best for: API testing at scale, coverage backlog reduction, QA automation

- Includes self-healing: tests auto-update when APIs change

AI test generation can create thousands of high-quality integration tests in hours, applying the same formal methodologies a senior QA engineer would use.

Airwallex had a coverage problem.

Their API surface was vast. Hundreds of endpoints. Thousands of edge cases. Writing tests for comprehensive coverage was a project that kept getting deprioritized.

“Everyone knew we needed more tests. No one had weeks to write them.”

The testing gap was a source of production incidents. Bugs that tests would have caught. Regressions that slipped through.

Then someone asked: what if we didn’t write the tests ourselves?

The Scale Challenge

Manual test writing doesn’t scale.

A developer might write 20 tests per day for a complex API. At that rate, comprehensive coverage for a large API surface takes months.

“We had APIs that had been in production for years with minimal test coverage. The backlog was overwhelming.”

The traditional approach — hire more QA, allocate more sprint time — hadn’t worked. The problem needed a different solution.

The Airtest Architecture

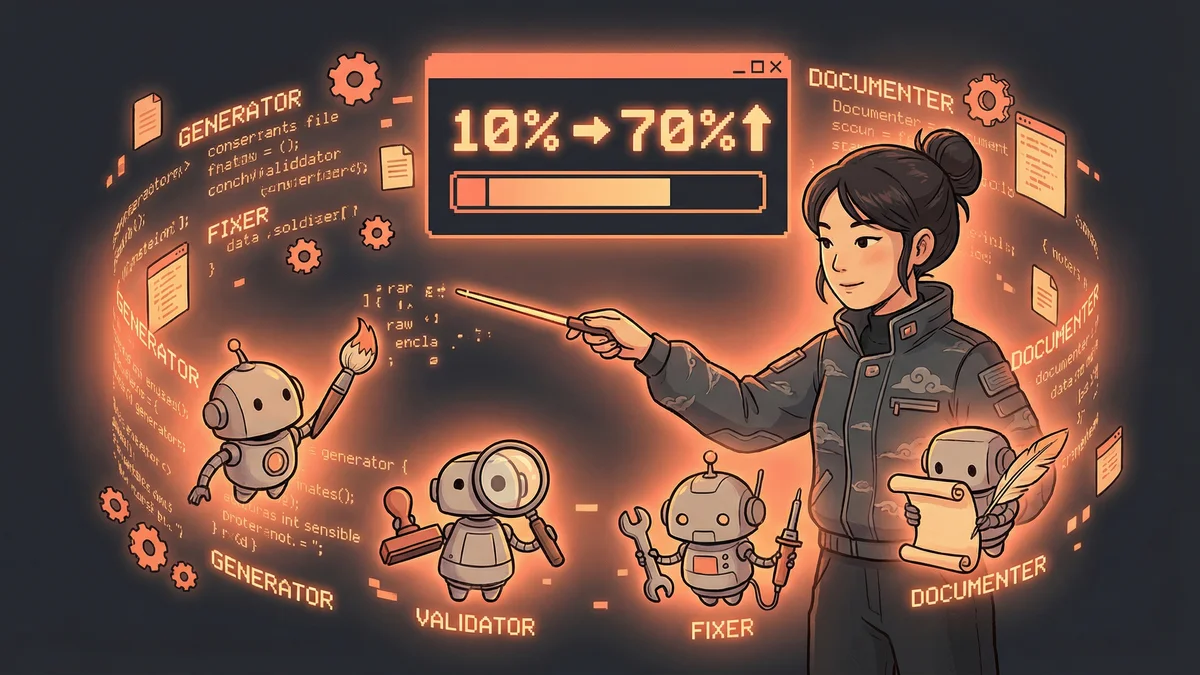

Airwallex built a multi-agent test generation platform.

Not a simple AI that generates random tests. A coordinated system with specialized agents:

Test Generation Specialists: Agents that understood testing methodologies. Equivalence Class Partitioning. Boundary Value Analysis. State Transition Testing.

Test Reviewer Agent: An agent that assessed generated tests for quality, coverage, and redundancy.

Test Debugging Agent: When tests failed, this agent diagnosed whether the test was wrong or the code was wrong.

Existing Tests Analysis Agent: An agent that understood what coverage already existed and identified gaps.

The agents worked together. Generation → Review → Debug → Gap Analysis → More Generation.

The Methodology Application

The test generation wasn’t random.

Agents applied formal testing methodologies:

Equivalence Class Partitioning: Divide inputs into classes where behavior should be identical. Test one value from each class.

Boundary Value Analysis: Test at the edges. Minimum values. Maximum values. Just below limits. Just above limits.

State Transition Testing: For APIs with state, test transitions between states. Valid transitions. Invalid transitions.

“The AI wasn’t just generating input/output pairs. It was applying the same methodologies a senior QA engineer would use.”

The Results

The numbers were dramatic.

4,000+ integration tests generated. Not toy tests. Real tests exercising real API behavior.

Two weeks → Two hours. What would have taken a team two weeks per API test suite took two hours of AI generation.

Coverage improvement: Previously undertested APIs now had comprehensive coverage.

The Quality Verification

Generating tests is easy. Generating good tests is hard.

The Test Reviewer Agent filtered output:

- Tests that were redundant with existing tests? Removed.

- Tests that couldn’t possibly fail? Removed.

- Tests that tested the wrong thing? Flagged for revision.

“We didn’t accept every test the generator produced. The review stage was as important as generation.”

Quality gating ensured the test suite grew with useful tests, not noise.

The Self-Healing Capability

Tests break. APIs change. Test data expires.

Airtest included self-healing capabilities:

When a test failed, the Debugging Agent analyzed why. If the test was outdated (the API had legitimately changed), it updated the test. If the test found a real bug, it flagged for human review.

“Tests that would normally require maintenance ran without human intervention. The system fixed its own breakage.”

The Gap Identification Loop

The Existing Tests Analysis Agent closed the loop.

After generation, it compared new tests against existing coverage. Identified areas still undertested. Fed that information back to generators.

“The system knew what it had tested and what it hadn’t. It could target gaps specifically.”

Coverage improved systematically, not randomly.

The Integration Point

Generated tests integrated into CI/CD normally.

They ran with the rest of the test suite. Failed tests blocked deployment. Coverage metrics included AI-generated tests.

“From the pipeline’s perspective, these were just tests. It didn’t matter who wrote them.”

The AI-generated tests had the same authority as human-written tests.

The Developer Experience

Developers interacted with Airtest through simple commands.

“Generate tests for this API.” The system analyzed the API, applied methodologies, produced tests, reviewed them, and delivered a test file.

“Find coverage gaps in this module.” The system analyzed existing tests, identified untested paths, and reported gaps.

“The interface was simple. The complexity was hidden.”

The Time Compression

Two weeks to two hours deserved unpacking.

The two-week estimate: a senior QA engineer studying an API, understanding edge cases, writing tests, reviewing tests, fixing tests, achieving coverage.

The two-hour reality: AI generation running in parallel, producing thousands of test cases, review agents filtering quality, output ready for integration.

“We compressed calendar time by parallelizing everything. Human QA is serial. AI is parallel.”

The Human Role

Humans didn’t disappear.

Architecture decisions: Which APIs to prioritize. What coverage targets to set. Which methodologies to emphasize.

Edge case specification: Unusual scenarios the AI might miss. Business logic that required domain knowledge.

Final review: Spot-checking generated tests. Verifying quality. Catching AI mistakes.

“Our QA engineers became QA architects. They designed test strategies. The AI executed them.”

The Cost Calculation

Test generation at scale had costs.

API usage for analysis and generation. Compute for running generated tests. Engineering time for setup and maintenance.

But compare to alternatives: months of developer time. Delayed releases waiting for test coverage. Production incidents from missing tests.

“The AI approach was dramatically cheaper than the human approach. Not marginally. Dramatically.”

The Cultural Shift

The test factory changed how Airwallex thought about testing.

“Before, tests were something we grudgingly wrote when we had time. After, tests were something we generated at will.”

The mental model shifted. Tests became cheap. Coverage became achievable. The backlog became clearable.

The Scaling Pattern

The pattern applied beyond their initial use case.

Other teams adopted similar approaches. Different APIs. Different coverage goals. Same underlying system.

“We built a capability, not a one-time project. The capability keeps generating value.”

The Quality Metrics

Generated tests caught real bugs.

“In the first month, AI-generated tests found twelve bugs that would have reached production. Twelve bugs that existing tests missed.”

The tests weren’t just covering code — they were finding problems. The coverage was meaningful.

The Maintenance Reality

Large test suites require maintenance.

Test data expires. APIs evolve. Dependencies change.

“The self-healing capability was critical. Without it, we’d have generated 4,000 tests and then spent months keeping them working.”

Automated maintenance meant the investment continued to pay dividends.

The Lessons Extracted

Airwallex documented learnings for other organizations:

Start with methodology. AI that understands testing theory produces better tests than AI that generates randomly.

Build review into the pipeline. Not every generated test is good. Quality filtering is essential.

Plan for maintenance. Tests need updating. Automate as much of that as possible.

Measure outcomes, not outputs. 4,000 tests means nothing if they don’t catch bugs. Measure what matters.

The Ongoing Evolution

The test factory continued improving.

New methodologies added. Better debugging logic. More sophisticated gap analysis.

“The system six months from now will be better than the system today. It’s designed to improve.”

The investment was in capability, not a fixed tool.