TL;DR

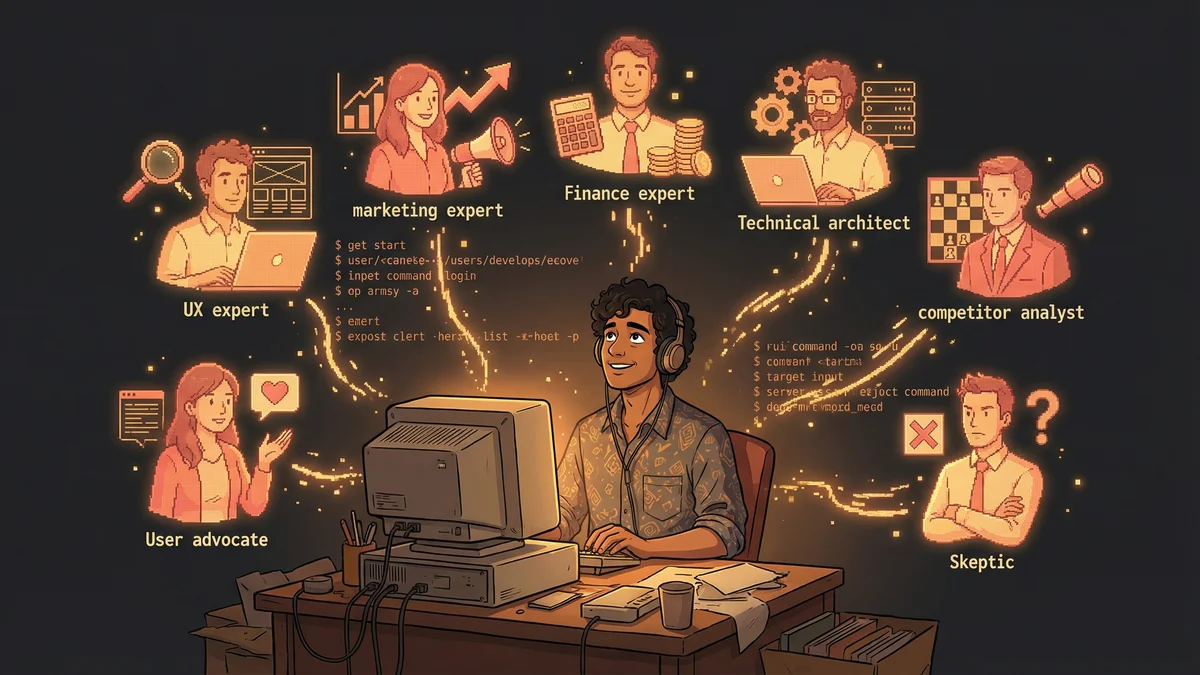

- Evaluated app idea with 7 AI specialist personas: UX, Marketing, Finance, Technical, Competitor, User Advocate, Skeptic

- Ran 35 research chains before making final decision, each chain spawning follow-up questions

- Used Claude Code with defined expert personas plus Synthesizer to resolve conflicts

- Best for: Solo founders making significant decisions without access to expensive human advisors

- Key lesson: By Raj’s own estimate, 95% as good as real advisors at 5% of the cost; simulated expertise still provides systematic challenge

A founder assembled an AI board of directors with seven specialist personas, running 35 research chains to evaluate his app idea from every angle before committing.

Raj had an app idea. A good one, he thought. Maybe a great one.

But he’d been wrong before. Three previous startups. Two failures. One modest exit. Pattern recognition told him that his enthusiasm wasn’t a reliable signal.

“I needed outside perspectives. Real advisors charge thousands per hour. Investors want equity before they’ll think hard about your idea. Friends are too nice to be honest.”

He needed a board of directors. Without the board.

The Research Swarm

Raj started with research. Not casual Googling — systematic investigation.

He ran 15-20 targeted queries through Perplexity. Each one answered a specific question about his market, competitors, users, technology.

“Who are the existing players? What do users complain about? What’s the technology landscape? What are the regulatory concerns? Every angle I could think of.”

The queries returned raw material. Useful, but overwhelming. Facts without synthesis.

The Synthesis Layer

Raj fed all the research results to Claude Code.

“Here are 17 research summaries about the [X] market. Synthesize them into a comprehensive briefing document. Identify key insights, contradictions, and open questions.”

Claude processed the pile. Extracted themes. Noted where sources agreed and disagreed. Highlighted what remained unknown.

“I went from scattered facts to a coherent briefing. But I still didn’t know if my idea was good.”

The Board Assembly

Raj remembered the “four minds” pattern — multiple Claude instances with different perspectives. He wanted something more ambitious.

Not just four perspectives. A full advisory board with specialized expertise.

He designed seven specialist roles:

The UX Expert: “You are a veteran UX designer with 15 years of experience. You’ve seen hundreds of app launches. You focus on usability, user journeys, and common design mistakes.”

The Marketing Expert: “You are a growth marketing specialist. You’ve launched consumer apps and B2B products. You focus on acquisition channels, positioning, and go-to-market strategy.”

The Finance Expert: “You are a startup CFO. You’ve raised money and managed burn rates. You focus on unit economics, funding requirements, and financial viability.”

The Technical Architect: “You are a senior engineer who’s built products at scale. You focus on technical feasibility, architecture decisions, and development timelines.”

The Competitor Analyst: “You are a market intelligence specialist. You focus on competitive positioning, differentiation, and market dynamics.”

The User Advocate: “You represent the target user. You focus on whether the product actually solves a real problem worth paying for.”

The Skeptic: “You are a professional devil’s advocate. Your job is to find holes, identify risks, and stress-test assumptions.”
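As a minimal sketch, the seven roles above can be kept as plain data so the same definitions are reused across meetings. The prompt text is copied from the role descriptions; the `Persona` class and `BOARD` tuple are hypothetical names chosen for illustration, not something the article says Raj used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    """One board seat: a display name plus the system prompt that defines the role."""
    name: str
    system_prompt: str


# Prompt text comes from the role descriptions; the container itself is an assumption.
BOARD = (
    Persona("UX Expert", "You are a veteran UX designer with 15 years of experience. "
            "You've seen hundreds of app launches. You focus on usability, "
            "user journeys, and common design mistakes."),
    Persona("Marketing Expert", "You are a growth marketing specialist. You've launched "
            "consumer apps and B2B products. You focus on acquisition channels, "
            "positioning, and go-to-market strategy."),
    Persona("Finance Expert", "You are a startup CFO. You've raised money and managed "
            "burn rates. You focus on unit economics, funding requirements, "
            "and financial viability."),
    Persona("Technical Architect", "You are a senior engineer who's built products at "
            "scale. You focus on technical feasibility, architecture decisions, "
            "and development timelines."),
    Persona("Competitor Analyst", "You are a market intelligence specialist. You focus "
            "on competitive positioning, differentiation, and market dynamics."),
    Persona("User Advocate", "You represent the target user. You focus on whether the "
            "product actually solves a real problem worth paying for."),
    Persona("Skeptic", "You are a professional devil's advocate. Your job is to find "
            "holes, identify risks, and stress-test assumptions."),
)

for seat in BOARD:
    print(f"{seat.name}: {seat.system_prompt[:45]}...")
```

Keeping the prompts in one place makes it harder to drift into slightly different role wording from one meeting to the next.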

The Board Meeting

Raj ran a simulated board meeting.

Each specialist received the synthesized briefing and Raj’s idea description. Each was asked to evaluate the idea from their perspective.
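The fan-out described here, one identical input going to every specialist, might be sketched as follows. The function `run_board_meeting`, the message layout, and the `ask` callable are all assumptions; `ask` stands in for whatever model call is actually used, and the stub below only echoes what it receives.

```python
from typing import Callable, Dict


def run_board_meeting(
    personas: Dict[str, str],
    briefing: str,
    idea: str,
    ask: Callable[[str, str], str],
) -> Dict[str, str]:
    """Send the identical briefing and idea to every persona; return one reply per seat."""
    user_message = (
        f"Briefing:\n{briefing}\n\n"
        f"Idea:\n{idea}\n\n"
        "Evaluate this idea from your perspective."
    )
    return {name: ask(system_prompt, user_message)
            for name, system_prompt in personas.items()}


def stub_ask(system_prompt: str, user_message: str) -> str:
    """Placeholder for a real model call; it only reports what it was given."""
    return f"({len(user_message)} characters reviewed under: {system_prompt})"


replies = run_board_meeting(
    {"Skeptic": "You are a professional devil's advocate.",
     "User Advocate": "You represent the target user."},
    briefing="(synthesized briefing goes here)",
    idea="(idea description goes here)",
    ask=stub_ask,
)
for seat, reply in replies.items():
    print(seat, "->", reply)
```

Building the user message once, outside the loop, is what guarantees every seat sees the same information.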

The UX Expert focused on the user flow. “The core action is three taps. That’s good. But onboarding looks complex — 7 steps before the user sees value is too many.”

The Marketing Expert evaluated positioning. “The differentiation is unclear. When I look at competitors, I can’t see why a user would switch. You need a sharper hook.”

The Finance Expert ran numbers. “Your pricing assumption gives a $15 LTV. With projected CAC of $30, you’re underwater from day one. Either lower acquisition costs or increase LTV.”

The Technical Architect assessed feasibility. “The real-time features require WebSocket infrastructure. That’s achievable, but adds 6-8 weeks to MVP timeline.”

The Competitor Analyst mapped the landscape. “Three well-funded players already. But they’re all targeting enterprise. There might be a consumer niche they’ve ignored.”

The User Advocate questioned value. “Would I actually pay for this? Honestly, I’m not sure. The problem is real but mild. I might use a free solution instead.”

The Skeptic found holes. “You’re assuming users will input data manually. Every app that requires manual data entry faces adoption problems. Where’s your automation?”
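The Finance Expert's objection above reduces to simple arithmetic. A small sketch using the quoted figures ($15 LTV, $30 CAC); the function name and the returned keys are illustrative assumptions.

```python
def unit_economics(ltv: float, cac: float) -> dict:
    """Compare lifetime value (LTV) with customer acquisition cost (CAC)."""
    return {
        "ltv_to_cac": ltv / cac,            # below 1.0 means each customer loses money
        "margin_per_customer": ltv - cac,   # negative means underwater
    }


result = unit_economics(ltv=15.0, cac=30.0)
print(result)  # {'ltv_to_cac': 0.5, 'margin_per_customer': -15.0}
```

With these numbers every acquired customer costs $15 more than they return, which is why the advice offers only two levers: lower CAC or raise LTV.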

The Conflict Resolution

The specialists disagreed on key points.

UX said simplify. Technical said simplification would remove core features. Marketing said differentiate. Finance said differentiation costs money you don’t have. User Advocate questioned demand. Competitor Analyst saw whitespace.

Raj added a Synthesizer role: “You’ve heard from seven experts. They don’t agree on everything. Identify where consensus exists, where key conflicts remain, and what additional information would resolve the conflicts.”

The Synthesizer produced a unified assessment:

Consensus: The technical approach is feasible but timeline is aggressive. Onboarding needs simplification.

Key conflicts: Demand validation is the central uncertainty. Marketing sees opportunity; User Advocate has doubts.

Resolution path: User research with 20-30 potential users would resolve the demand question before significant development investment.
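One way the Synthesizer step could be wired up is to concatenate every specialist's evaluation under the Synthesizer instruction. The instruction text is taken from the quote above; `build_synthesizer_input`, the section layout, and the sample evaluations are assumptions for illustration.

```python
from typing import Dict

SYNTHESIZER_PROMPT = (
    "You've heard from seven experts. They don't agree on everything. "
    "Identify where consensus exists, where key conflicts remain, and what "
    "additional information would resolve the conflicts."
)


def build_synthesizer_input(evaluations: Dict[str, str]) -> str:
    """Place each specialist's evaluation under its own labelled section."""
    sections = [f"[{name}]\n{text}" for name, text in evaluations.items()]
    return SYNTHESIZER_PROMPT + "\n\n" + "\n\n".join(sections)


# Placeholder evaluations, shortened from the meeting described earlier.
sample = {
    "UX Expert": "Onboarding looks too complex.",
    "User Advocate": "The problem is real but mild.",
}
print(build_synthesizer_input(sample))
```

Labelling each section by role lets the Synthesizer attribute a conflict to the specialists who actually disagree.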

The Action Items

The board meeting produced actionable next steps:

- Conduct user validation interviews (addresses demand uncertainty)

- Redesign onboarding to 3 steps max (consensus item)

- Develop differentiation messaging (marketing requirement)

- Re-run financial model with realistic CAC (finance requirement)

- Scope technical MVP with explicit timeline (architecture requirement)

“I went from ‘I think this is a good idea’ to ‘here are the five things I need to validate.’ Much more useful.”

The Iteration Cycle

The board wasn’t one meeting. It was an ongoing advisory relationship.

Raj completed user validation interviews. Fed the results back to the board. Asked for updated assessments.

User Advocate’s tone changed: “The interview data suggests stronger demand than I expected. 7 of 10 subjects described the problem as ‘frustrating’ or ‘time-consuming.’ Willingness to pay appeared in 5 conversations.”

Finance Expert updated: “With 50% conversion at $10/month, your unit economics work. The interview data suggests that conversion rate may be achievable.”

“The board evolved its opinion as I brought new evidence. Just like a real board would.”

The Research Chains

Some board questions required more research.

Marketing Expert: “I’d like to understand the acquisition channels better. What’s the CAC on Instagram vs. Google vs. content marketing for similar apps?”

Raj ran another research cycle. 10 queries on acquisition costs for consumer apps. Fed results to Marketing Expert. Got refined recommendations.

“Each board question could spawn a research chain. The process was deep, not shallow.”

The 35-Chain Decision

By the time Raj felt ready to commit, he’d run 35 research chains.

Each chain: targeted queries → synthesis → board evaluation → follow-up questions → more research.
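The chain above is a loop in which each board evaluation feeds the next round of queries. A rough sketch, assuming the three steps are supplied as callables; `run_research_chain`, its parameters, and the `max_rounds` cap are hypothetical, and the stubs stand in for the search tool and the model.

```python
from typing import Callable, List


def run_research_chain(
    questions: List[str],
    research: Callable[[str], str],
    synthesize: Callable[[List[str]], str],
    evaluate: Callable[[str], List[str]],
    max_rounds: int = 3,
) -> List[str]:
    """Repeat queries -> synthesis -> board evaluation until no follow-ups remain."""
    briefings: List[str] = []
    for _ in range(max_rounds):
        if not questions:
            break  # the board asked nothing further
        findings = [research(q) for q in questions]   # targeted queries
        briefing = synthesize(findings)               # synthesis
        briefings.append(briefing)
        questions = evaluate(briefing)                # follow-up questions
    return briefings


# Stubs for demonstration only: the board asks one follow-up, then stops.
pending = [["What is CAC by channel?"], []]
history = run_research_chain(
    ["Who are the existing players?"],
    research=lambda q: f"finding for: {q}",
    synthesize=lambda findings: " | ".join(findings),
    evaluate=lambda briefing: pending.pop(0),
)
print(history)
```

The round cap is there only so a board that always has another question cannot loop indefinitely.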

“I’d explored every angle I could think of. The board had challenged every assumption. What remained wasn’t certainty — but informed confidence.”

He knew the risks. He knew the unknowns. He knew what could go wrong and how to monitor for it.

The Comparison to Human Boards

Raj had been on real advisory boards. The AI version was different.

Faster: A human board meets quarterly. The AI board meets on demand.

Cheaper: Human advisors cost real money. AI advisors cost tokens.

More comprehensive: Seven specialists evaluating simultaneously. Getting seven human experts to review the same material at the same time is rarely practical.

Less networked: Human advisors provide introductions. AI advisors don’t.

Less experienced: Real experts have pattern recognition from actual experience. AI specialists simulate expertise.

“The AI board wasn’t as good as an actual advisory board with domain experts. But it was 95% as good at 5% of the cost. For a solo founder, that math works.”

The Bias Acknowledgment

The board had limitations.

All seven specialists were Claude in different personas. The underlying model was the same. True diversity of thought was bounded.

“I couldn’t simulate a 60-year-old investor’s gut feeling or a 25-year-old user’s social dynamics intuition. Those came from life experience the model doesn’t have.”

For certain questions, Raj still sought human input. The AI board handled systematic analysis. Humans handled judgment calls that required lived experience.

The Pattern Library

Raj documented board configurations for different decision types:

- Product decisions: UX + Technical + User Advocate + Skeptic

- Market decisions: Marketing + Competitor + Finance + Skeptic

- Strategy decisions: All seven specialists

- Quick checks: User Advocate + Skeptic only (faster, cheaper)
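These configurations lend themselves to a simple lookup table. A minimal sketch, where the dictionary keys and the `select_board` helper are assumed names and the role lists come from the configurations above:

```python
ALL_SEVEN = ["UX", "Marketing", "Finance", "Technical",
             "Competitor", "User Advocate", "Skeptic"]

BOARD_CONFIGS = {
    "product": ["UX", "Technical", "User Advocate", "Skeptic"],
    "market": ["Marketing", "Competitor", "Finance", "Skeptic"],
    "strategy": ALL_SEVEN,
    "quick_check": ["User Advocate", "Skeptic"],
}


def select_board(decision_type: str) -> list:
    """Return the specialists to convene for a given kind of decision."""
    try:
        return list(BOARD_CONFIGS[decision_type])  # copy so callers cannot mutate the table
    except KeyError:
        known = ", ".join(sorted(BOARD_CONFIGS))
        raise ValueError(f"Unknown decision type {decision_type!r}; expected one of: {known}") from None


print(select_board("quick_check"))  # ['User Advocate', 'Skeptic']
```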

Different decisions warranted different board compositions. The pattern became reusable.

The Current Practice

Two years later, Raj still convened AI boards.

Feature prioritization. Pricing changes. Market expansion. Any significant decision got specialist evaluation.

“I don’t make major decisions alone anymore. I make them with a board that I can convene in minutes.”

The app had launched. It was working. The board had helped him avoid multiple mistakes he would have made without its systematic challenge.

The Recommendation

For others building specialist boards:

“Define the specialists based on what expertise your decision actually needs. Don’t default to generic roles.”

“Give each specialist the same information. Don’t bias outcomes by selective briefing.”

“Include a Skeptic and a Synthesizer in every board. The Skeptic finds holes. The Synthesizer resolves conflicts.”

“Let board meetings spawn research chains. Follow-up questions are where the real insights emerge.”

“Compare AI board advice to your gut feeling. Where they diverge, investigate. Sometimes your gut is right. Sometimes the board caught something.”

The Philosophy

Raj’s view on AI advisory boards:

“The board doesn’t replace human judgment. It supplements it. It asks questions you forgot to ask. It challenges assumptions you didn’t know you had.”

“Seven specialists thinking hard about your decision for an hour is more scrutiny than most decisions ever receive. Even simulated specialists provide value.”

“The goal isn’t to remove doubt. It’s to ensure you’ve done due diligence. Confident decisions come from systematic evaluation, not wishful thinking.”