TL;DR

- A multi-agent system outperformed a single agent by 90% on Anthropic’s internal evaluation of complex research tasks

- Found 95%+ of target data vs. about 40% with a single-agent approach

- Uses an orchestrator-worker pattern: Claude Opus 4 leads, Claude Sonnet 4 workers execute in parallel

- Best for: broad research, competitive intelligence, due diligence, market surveys

- Trade-off: roughly 15× the tokens of a chat interaction, but dramatically more thorough results

Multi-agent AI research systems can find 95% of target information where single agents find only 40%—the key is parallel execution with coordinated planning.

The research question seemed simple.

“List all board members of companies in the S&P 500 Information Technology sector.”

A single AI agent tried. It searched. It found some companies. It looked up board members. Eventually, it got confused, lost track, and returned incomplete results.

The question required finding 70+ companies, then finding 8-12 board members each. Hundreds of lookups. Too much for one agent to track.

Anthropic’s engineers wondered: what if you threw more agents at the problem?

The answer surprised them.

The Breakthrough

On Anthropic’s internal research evaluation, a multi-agent system with Claude Opus 4 as lead and Claude Sonnet 4 subagents outperformed a single-agent Claude Opus 4 by 90.2%.

Not 10% better. Not 50% better. Ninety percent.

“We expected improvement. We didn’t expect this much.”

The architecture was simple: a lead agent that planned, and worker agents that executed. But the results were transformative.

The Architecture

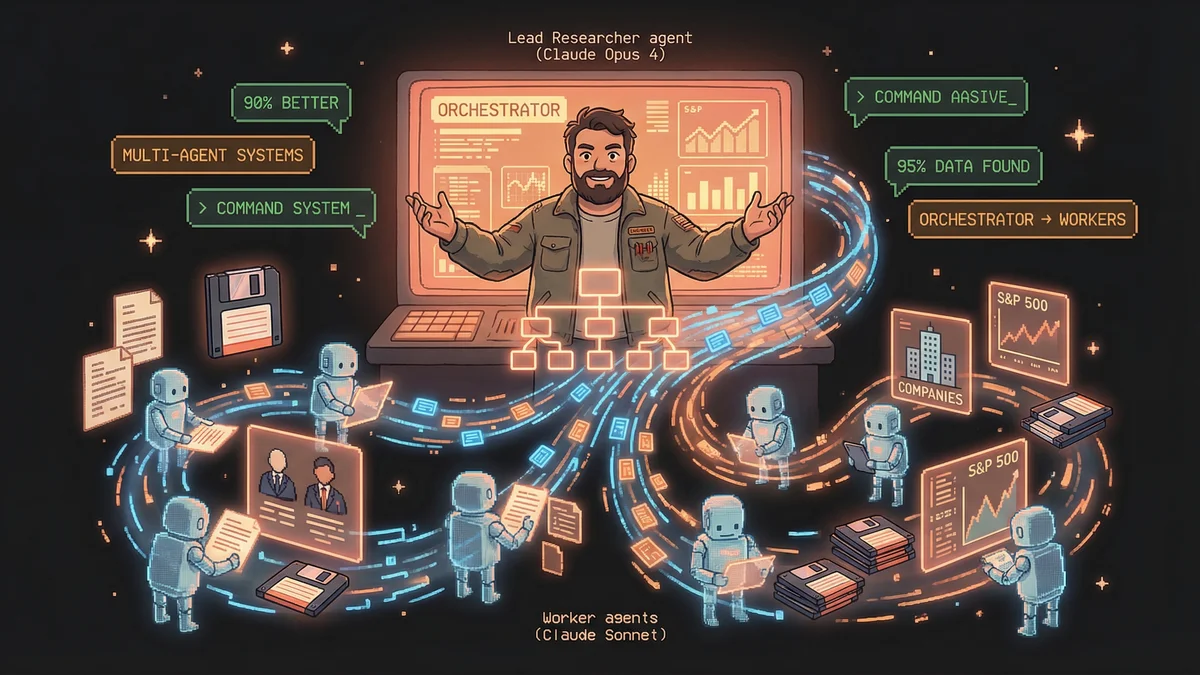

The system used an orchestrator-worker pattern.

Lead Researcher (Claude Opus 4): Plans the high-level approach. Breaks the query into subtasks. Coordinates worker agents. Synthesizes final results.

Worker Subagents (Claude Sonnet 4): Execute specific subtasks in parallel. Each has its own context window. Each can search the web, access documents, and gather information.

Citation Agent: Verifies sources. Ensures every claim in the final answer has a citation.

“The lead agent thinks strategically. The worker agents think tactically. Together, they cover more ground than any single agent could.”

The Workflow

Here’s how a complex query flows through the system:

1. Query Analysis: The lead agent receives the question. It analyzes what’s needed. For the S&P 500 query: identify IT sector companies, then find board members for each.

2. Plan & Spawn: The lead creates subtasks. For large queries, it spawns multiple worker agents, each assigned a piece. “Agent A: find the list of IT companies. Agent B: board members for companies A-M. Agent C: board members for companies N-Z.”

3. Parallel Execution: Worker agents run simultaneously. Each searches independently. They don’t coordinate directly — they just execute their assigned task.

4. Result Collection: Workers return their findings. “Company X: board members are A, B, C. Source: [link].” Raw data flows back to the lead.

5. Gap Analysis: The lead checks for completeness. Missing companies? Missing information? It can spawn additional workers to fill gaps.

6. Synthesis: The lead assembles a coherent answer from all worker outputs. Organizes, formats, ensures consistency.

7. Citation Verification: The citation agent reviews the assembled answer. For each factual claim, it verifies the source. Adds citations. Flags unsupported claims.

8. Final Answer: The user receives a comprehensive, cited response.
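The eight steps above can be sketched as a small Python pipeline. This is a minimal sketch under loud assumptions, not Anthropic’s implementation: workers are plain functions that look up a hypothetical `FAKE_WEB` dictionary instead of LLM agents with search tools, companies and board members are placeholder names, the lead plans by round-robin slicing rather than alphabetically, and the citation step is left out.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import partial

# Hypothetical stand-in for web research: company -> (board members, first
# round in which a worker manages to find it). "Company C" only turns up on a
# follow-up round, so the gap-analysis loop has something to fill.
FAKE_WEB = {
    "Company A": (["A-1", "A-2"], 1),
    "Company B": (["B-1"], 1),
    "Company C": (["C-1", "C-2", "C-3"], 2),
}

def worker(companies, round_no):
    """Worker subagent: research its assigned slice, independently."""
    found = {}
    for company in companies:
        members, findable_from = FAKE_WEB.get(company, ([], None))
        if findable_from is not None and round_no >= findable_from:
            found[company] = members
    return found

def plan(pending, n_workers):
    """Lead agent: split pending companies into up to n_workers slices."""
    slices = [pending[i::n_workers] for i in range(n_workers)]
    return [s for s in slices if s]

def run_research(companies, n_workers=2, max_rounds=3):
    findings = {}
    pending = list(companies)
    for round_no in range(1, max_rounds + 1):
        if not pending:
            break
        slices = plan(pending, n_workers)
        with ThreadPoolExecutor(max_workers=len(slices)) as pool:
            # Parallel execution; only the lead merges the results.
            for partial_result in pool.map(partial(worker, round_no=round_no), slices):
                findings.update(partial_result)
        # Gap analysis: anything still missing is re-planned next round.
        pending = [c for c in companies if c not in findings]
    # Synthesis: one consistently ordered report, plus unresolved gaps.
    report = {c: sorted(findings[c]) for c in sorted(findings)}
    return report, pending
```

Calling `run_research(["Company A", "Company B", "Company C", "Company D"])` fills A and B in round 1, picks up C in round 2, and reports `["Company D"]` as an unresolved gap. Workers never talk to each other; all coordination happens in the lead’s loop.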

The Token Economics

Multi-agent systems use more resources.

Each worker agent consumes tokens independently. Anthropic reports that agents typically use about 4× the tokens of a chat interaction, and multi-agent systems about 15×.

“This isn’t cheap. But for queries where thoroughness matters, the cost is justified.”

The trade-off: expensive per query, but dramatically more effective. The right tool for the right job.
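Because every agent bills separately, the cost of one research query is just the sum over the lead, the workers, and the citation agent. The back-of-the-envelope sketch below shows how a ratio in the 15× range can arise; every token count and the $10-per-million price are hypothetical placeholders, not measured values or real pricing.

```python
def total_tokens(lead: int, workers: list[int], citation: int) -> int:
    """Each agent consumes tokens independently, so the total is a plain sum."""
    return lead + sum(workers) + citation

def cost_usd(tokens: int, usd_per_million: float) -> float:
    """Convert a token count to dollars at a flat (hypothetical) rate."""
    return tokens * usd_per_million / 1_000_000

# All numbers below are hypothetical placeholders, not measured values.
baseline = 10_000  # tokens for one ordinary query
multi = total_tokens(lead=20_000, workers=[25_000] * 5, citation=10_000)
ratio = multi / baseline  # 155_000 / 10_000 = 15.5

print(f"{multi:,} tokens, {ratio:.1f}x the baseline, ${cost_usd(multi, 10.0):.2f}")
```

This prints `155,000 tokens, 15.5x the baseline, $1.55`. The per-agent breakdown makes the main lever visible: each extra worker adds its full context’s worth of tokens.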

The Parallelism Advantage

Why does this work so well?

Context window multiplication: A single agent has one context window — maybe 200k tokens. Five agents have five context windows. More working memory for complex problems.

Breadth coverage: One agent searching sequentially might explore 20 leads. Five agents in parallel might explore 100.

Failure isolation: If one worker agent goes wrong, it doesn’t corrupt the others. The lead can recognize bad results and request corrections.

“It’s like having a research team instead of a single researcher. More eyes, more angles, more coverage.”
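Failure isolation is easy to get in Python’s `asyncio`: `asyncio.gather(..., return_exceptions=True)` returns a failing worker’s exception as a value instead of cancelling the rest. The sketch below assumes hypothetical `worker` coroutines (a short sleep stands in for search latency) and has the lead retry only the failed pieces; it illustrates the idea rather than Anthropic’s actual error handling.

```python
import asyncio

async def worker(task: str) -> str:
    """Hypothetical worker; one task always fails to simulate a bad run."""
    await asyncio.sleep(0.01)  # stands in for search latency
    if task == "broken topic":
        raise RuntimeError(f"worker lost track on {task!r}")
    return f"notes on {task}"

async def run_all(tasks, retries=1):
    results = {}
    todo = list(tasks)
    for _ in range(retries + 1):
        # All workers run concurrently; exceptions come back as values.
        outcomes = await asyncio.gather(*(worker(t) for t in todo),
                                        return_exceptions=True)
        failed = []
        for task, outcome in zip(todo, outcomes):
            if isinstance(outcome, Exception):
                failed.append(task)       # isolated: other results are kept
            else:
                results[task] = outcome
        if not failed:
            break
        todo = failed                     # lead re-requests only the failures
    return results, failed
```

`asyncio.run(run_all(["topic A", "topic B", "broken topic"]))` keeps the notes for topics A and B and reports `["broken topic"]` as still failed after one retry.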

The Success Measurement

The team measured success on complex queries.

Single agent: found about 40% of board members across 70% of companies. Incomplete. Many gaps.

Multi-agent: found 95%+ of board members across 98% of companies. Near-complete. Few gaps.

“For broad, information-gathering queries, multi-agent is dramatically better. Not marginally. Dramatically.”
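The article doesn’t say exactly how these percentages were computed. One plausible way, assuming a hand-built ground-truth list of companies and their board members, is to measure company coverage (companies with at least one member found) and member recall (true members found out of all true members). The data below is hypothetical.

```python
def score(truth: dict[str, set[str]], found: dict[str, set[str]]):
    """Return (company coverage, board-member recall) against ground truth."""
    covered = [c for c in truth if found.get(c)]
    company_coverage = len(covered) / len(truth)
    total_members = sum(len(members) for members in truth.values())
    hits = sum(len(truth[c] & found.get(c, set())) for c in truth)
    member_recall = hits / total_members
    return company_coverage, member_recall

# Hypothetical ground truth and agent output.
truth = {"A": {"a1", "a2"}, "B": {"b1", "b2"}, "C": {"c1"}, "D": {"d1", "d2", "d3"}}
found = {"A": {"a1", "a2"}, "B": {"b1"}, "C": {"c1", "x9"}}
```

Here `score(truth, found)` gives `(0.75, 0.5)`: three of four companies are covered, and four of eight members are found. Recall ignores the wrong name `x9`; catching such errors would need a separate precision measure.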

The Limitations

Multi-agent isn’t universally better.

Simple queries: For “what’s the capital of France?” multi-agent is overkill. Expensive without benefit.

Highly sequential tasks: When each step depends on the previous (certain types of coding, linear reasoning chains), parallelism helps less.

Tight coordination needs: If agents need constant real-time coordination, the architecture struggles. They work best on independent subtasks.

“Multi-agent is a power tool. You use it for problems that need power.”
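Why sequential tasks benefit less can be made concrete with the standard work/span bound from parallel computing (a textbook concept, not something the article uses). With unit-time subtasks, no number of agents can beat total work divided by the longest dependency chain. The task graphs below are hypothetical.

```python
from functools import lru_cache

def max_speedup(deps: dict[str, list[str]]) -> float:
    """Work/span bound: number of subtasks / longest dependency chain.

    Assumes an acyclic graph where every subtask takes one unit of time.
    """
    @lru_cache(maxsize=None)
    def depth(task: str) -> int:
        return 1 + max((depth(d) for d in deps[task]), default=0)

    work = len(deps)
    span = max(depth(t) for t in deps)
    return work / span

independent = {"a": [], "b": [], "c": [], "d": []}
chain = {"a": [], "b": ["a"], "c": ["b"], "d": ["c"]}
```

`max_speedup(independent)` is `4.0`, while `max_speedup(chain)` is `1.0`: when each step depends on the previous one, extra agents sit idle.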

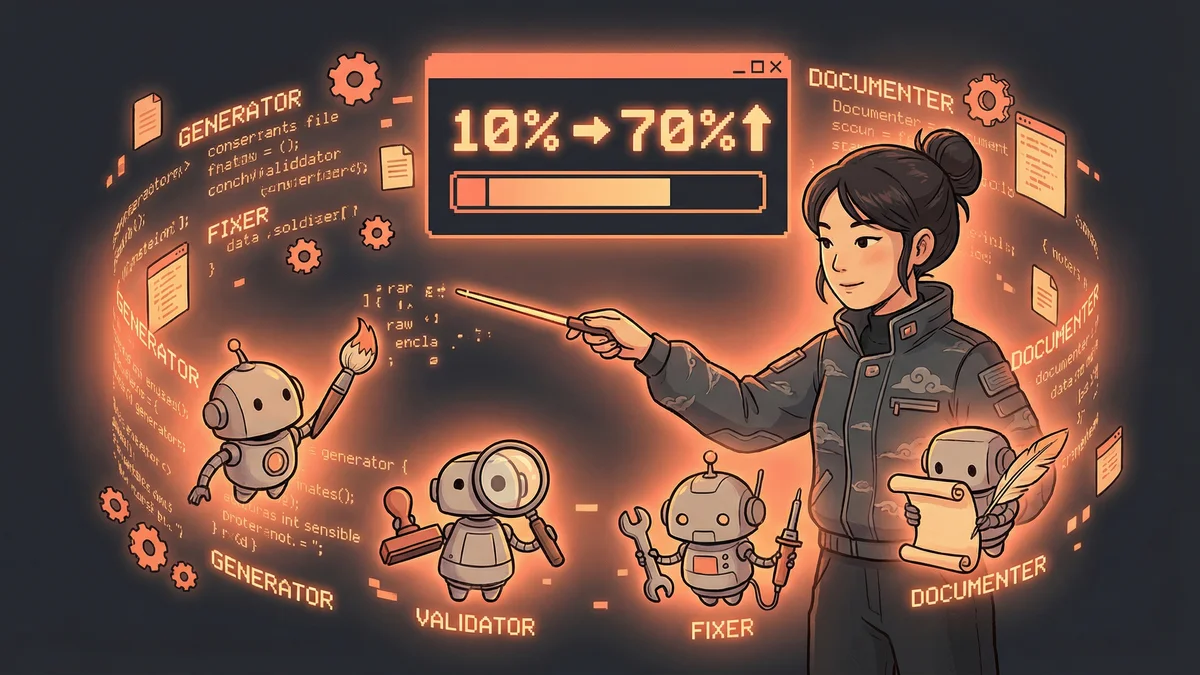

The Interleaved Thinking

The workers don’t just execute blindly.

They use “interleaved thinking” — reflecting on tool results as they get them. When a search returns data, the agent analyzes it before the next step.

“Am I finding what I need? Should I refine my search? Is this source reliable?”

The reflection prevents mechanical execution. Workers make judgment calls about their own progress.
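A toy version of a worker’s search-reflect loop is sketched below. In the real system the reflection is the model’s own reasoning between tool calls; here `reflect` is a hard-coded rule (treat only primary sources, marked with a hypothetical `proxy:` prefix, as reliable), and `fake_search` is a hypothetical index rather than a web-search tool.

```python
# Hypothetical search index; a real worker would call a web-search tool.
FAKE_INDEX = {
    "board members": ["blog: top tech executives to watch"],
    "board of directors proxy statement": ["proxy: director list for Company A"],
}

def fake_search(query: str) -> list[str]:
    return FAKE_INDEX.get(query, [])

def reflect(results: list[str]) -> tuple[str, list[str]]:
    """Stand-in for the model's reflection on a tool result.

    Hard-coded rule: only primary sources (prefixed "proxy:") are reliable.
    """
    reliable = [r for r in results if r.startswith("proxy:")]
    return ("done", reliable) if reliable else ("refine", [])

def research(candidate_queries: list[str]):
    trace = []
    for query in candidate_queries:
        results = fake_search(query)          # tool call
        verdict, reliable = reflect(results)  # think before the next step
        trace.append((query, verdict))
        if verdict == "done":
            return reliable, trace
    return [], trace                          # ran out of refinements
```

`research(["board members", "board of directors proxy statement"])` rejects the blog result, refines, and stops on the proxy result, returning `(["proxy: director list for Company A"], [("board members", "refine"), ("board of directors proxy statement", "done")])`.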

The Citation Innovation

The citation agent was a breakthrough.

Research is only valuable if verified. Every claim needs a source. Manual citation is tedious.

“We built a specialist agent whose only job is verification. It reads the answer, identifies claims, and confirms each one against sources.”

The result: answers you can trust. Not “Claude says X” but “X according to [source].”
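The article doesn’t describe the citation agent’s internals, and the real one is an LLM. To show the shape of the job (split the answer into claims, match each against sources, cite or flag), this sketch substitutes a crude word-overlap score; the sentence splitting, the 0.6 threshold, and the example source are all assumptions.

```python
import re

def split_claims(answer: str) -> list[str]:
    """Treat each sentence as one claim (a crude simplification)."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]

def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def cite(answer: str, sources: dict[str, str], threshold: float = 0.6):
    """Attach the best-matching source to each claim, or flag it."""
    cited, unsupported = [], []
    for claim in split_claims(answer):
        claim_words = words(claim)
        if not claim_words:
            continue
        best_url, best_score = None, 0.0
        for url, text in sources.items():
            overlap = len(claim_words & words(text)) / len(claim_words)
            if overlap > best_score:
                best_url, best_score = url, overlap
        if best_score >= threshold:
            cited.append(f"{claim} [{best_url}]")
        else:
            unsupported.append(claim)
    return cited, unsupported

answer = "Jane Doe chairs the board of Example Corp. Example Corp was founded on Mars."
sources = {"https://example.com/proxy": "Jane Doe serves as chair of the board of Example Corp."}
```

With these inputs the first sentence gets `[https://example.com/proxy]` attached and the Mars claim is flagged as unsupported. Word overlap is easily fooled, which is why a model-based verifier is the more realistic choice.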

The Memory Management

Complex research generates lots of data.

The lead agent maintains a persistent memory — a structured record of what’s been found, what’s pending, what’s failed.

“The memory is the coordination mechanism. Workers write to it. The lead reads from it. It’s the shared state that keeps everyone aligned.”

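Without persistent memory, the system would lose track as its context window filled; in Anthropic’s setup, context beyond 200,000 tokens is truncated, which is why the lead saves its plan to memory. With persistent memory, far longer research tasks stay manageable.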
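A minimal sketch of such a shared record, assuming a hypothetical `ResearchMemory` class backed by a JSON file: workers record results (or failures) under a lock, and the lead reads a snapshot instead of keeping everything in its context window. Anthropic’s actual memory mechanism isn’t specified at this level of detail.

```python
import json
import threading
from pathlib import Path

class ResearchMemory:
    """Hypothetical shared record of found / pending / failed work items."""

    def __init__(self, path: Path):
        self.path = path
        self._lock = threading.Lock()
        if path.exists():
            self.state = json.loads(path.read_text())
        else:
            self.state = {"found": {}, "pending": [], "failed": []}

    def _save(self):
        # Called with the lock held; persists state outside any context window.
        self.path.write_text(json.dumps(self.state, indent=2))

    def add_pending(self, item: str):
        with self._lock:
            if item not in self.state["pending"]:
                self.state["pending"].append(item)
            self._save()

    def record(self, item: str, result=None):
        """Workers call this; result=None marks the item as failed."""
        with self._lock:
            if item in self.state["pending"]:
                self.state["pending"].remove(item)
            if result is None:
                self.state["failed"].append(item)
            else:
                self.state["found"][item] = result
            self._save()

    def snapshot(self) -> dict:
        """The lead reads a deep copy rather than the live state."""
        with self._lock:
            return json.loads(json.dumps(self.state))
```

Because every write lands on disk, a fresh `ResearchMemory(path)` created later (say, after the lead’s context is reset) picks up exactly where the previous one stopped.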

The Real-World Applications

Where does this architecture shine?

Competitive intelligence: Gather information about multiple competitors simultaneously. Each worker researches one competitor.

Due diligence: Investigate a company before acquisition. Multiple aspects researched in parallel: financials, legal, reputation, technology.

Market research: Survey an entire industry. Each worker covers a segment.

Academic literature review: Find and synthesize papers on a topic. Workers search different databases, lead integrates findings.

“Any question where breadth matters. Where one perspective isn’t enough.”

The Build vs. Use Decision

Should you build your own multi-agent system?

“For most users, no. Use the multi-agent capabilities built into products like Claude’s research feature.”

Building multi-agent orchestration is complex. Context management, error handling, result synthesis — engineering challenges that teams spent months solving.

“Use the patterns. Don’t rebuild the infrastructure.”

The Future Trajectory

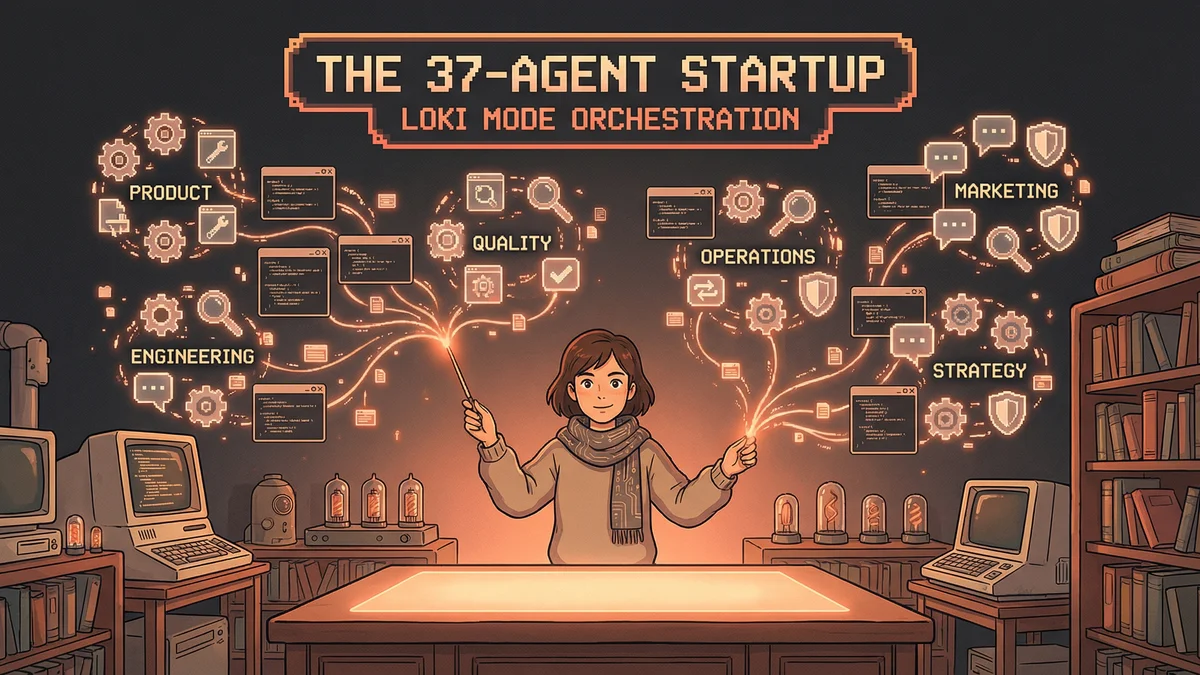

The team saw multi-agent as the future of complex AI tasks.

“Single-agent AI hit a ceiling. No matter how smart the model, one context window has limits. Multiple agents break through.”

The architecture scales. Ten agents. Fifty agents. Specialist agents for different domains. The pattern extends.

“We’re at the beginning of multi-agent AI. The current systems are like early databases — powerful but primitive. What’s coming will be much more sophisticated.”

The Coordination Problem

Adding more agents isn’t always better.

“Beyond a certain point, coordination overhead exceeds benefits. Managing fifty agents is harder than managing five.”

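The sweet spot depends on the task. Some queries need two agents. Some need twenty. Rather than leaving this to chance, Anthropic’s team wrote scaling rules into the lead agent’s prompt: a single agent with a handful of tool calls for simple fact-finding, a few subagents for direct comparisons, and more than ten for complex research.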

“Too few agents: incomplete results. Too many agents: wasted resources. Finding the right number is part of the intelligence.”
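The trade-off can be illustrated with a toy model (an assumption for illustration, not anything Anthropic published): completion time is the work split evenly across n agents plus a coordination cost that grows linearly with n, so T(n) = W/n + c·n. Setting dT/dn = -W/n² + c = 0 gives an optimum near n = √(W/c).

```python
import math

def completion_time(n: int, work: float, overhead: float) -> float:
    """Toy model: work divided across n agents, plus coordination cost c*n."""
    return work / n + overhead * n

def best_agent_count(work: float, overhead: float, max_agents: int = 100) -> int:
    """Brute-force the integer agent count that minimizes completion time."""
    return min(range(1, max_agents + 1),
               key=lambda n: completion_time(n, work, overhead))

def analytic_optimum(work: float, overhead: float) -> float:
    """Continuous optimum from dT/dn = 0, i.e. n = sqrt(W / c)."""
    return math.sqrt(work / overhead)
```

With a per-agent overhead of 1, `best_agent_count(4, 1)` is `2` and `best_agent_count(400, 1)` is `20`. Under this model the right team size grows only with the square root of the work, so a hundredfold larger query warrants ten times as many agents, not a hundred times.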

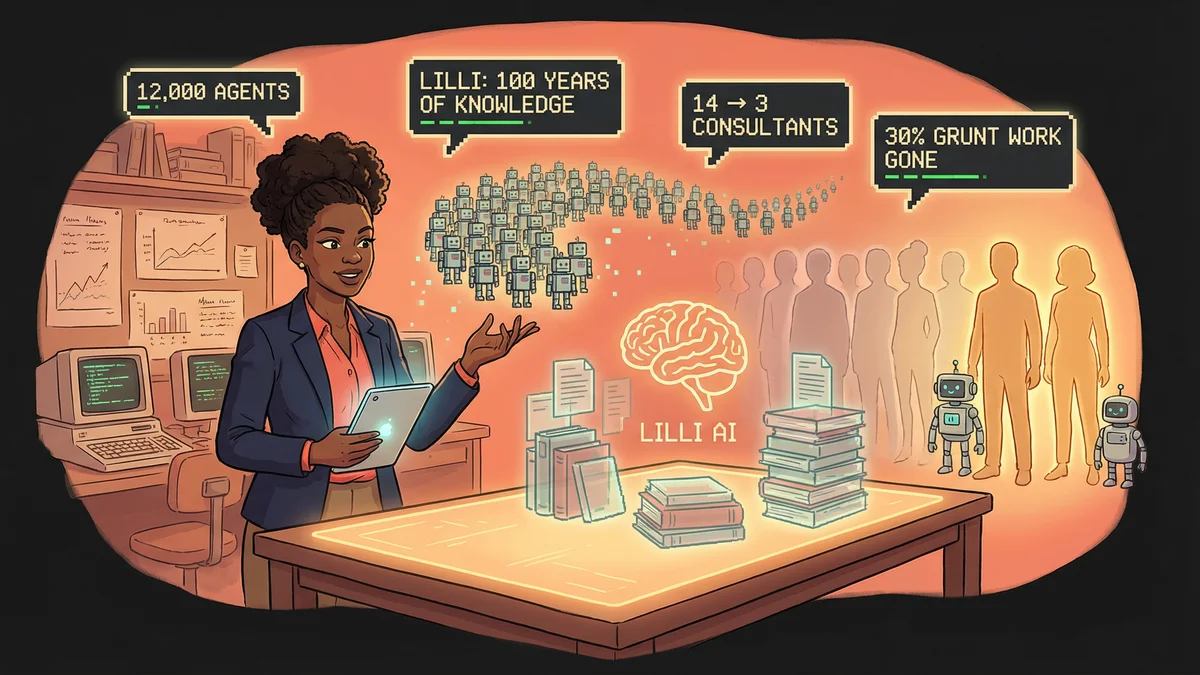

The Enterprise Implications

For organizations, multi-agent changes what’s possible.

Questions that were “too expensive to answer” — because they’d require days of human research — become answerable in minutes.

“Every organization has questions they’ve never asked because the cost of answering was too high. Multi-agent drops that cost dramatically.”

The strategic implications are significant. Better information. Faster. About anything.

The Current State

The multi-agent research system is live in Claude products.

When you ask a complex research question, you might be talking to an army.

“Users don’t see the orchestration. They see the answer. But behind simple interfaces, complex coordination is happening.”

The ninety percent improvement isn’t theoretical. It’s operational. Working. Now.