TL;DR

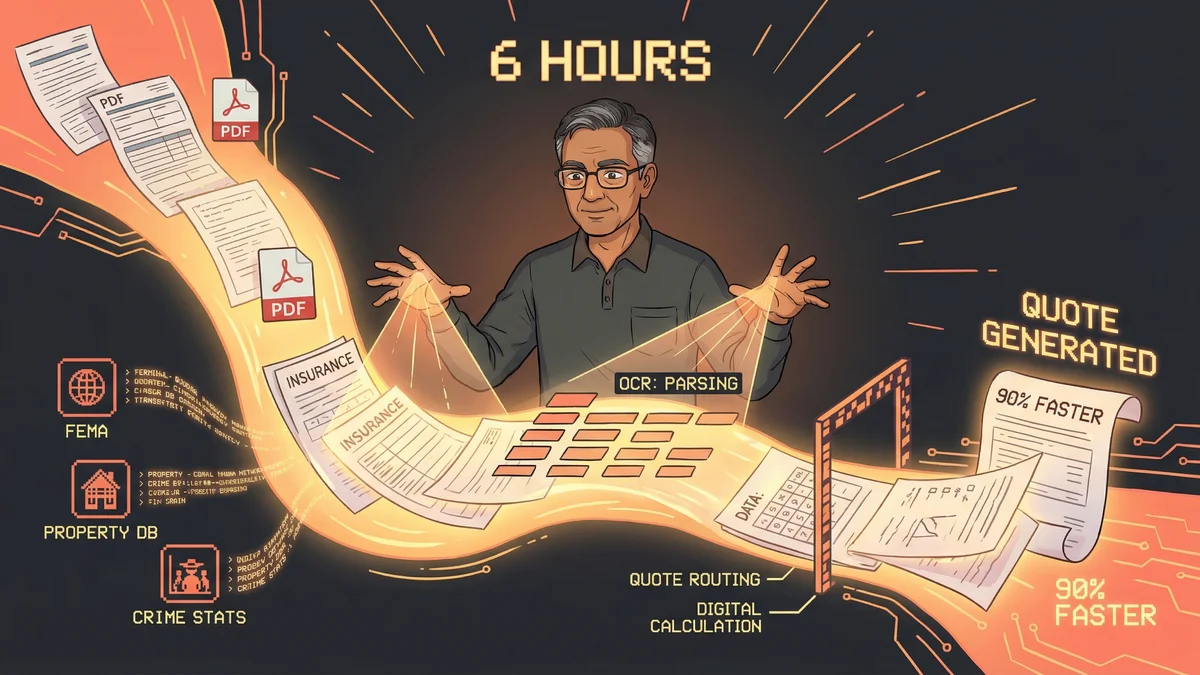

- Insurtech expert built working underwriting prototype in 6 hours with Claude Code

- Prototype handles OCR document parsing, API data enrichment, rating calculations, and quote generation

- Potential 90% reduction in underwriting time compared to traditional manual workflows

- Best for: insurance companies wanting to automate document-heavy underwriting processes

- Key insight: integrated AI workflows beat siloed tools requiring complex integration

A twenty-year insurtech veteran built a complete insurance underwriting prototype in just six hours — a task that would normally take teams six months to even scope.

Nauman had spent twenty years in insurance technology.

He knew exactly what an underwriting workflow looked like: receive application, parse documents, gather external data, calculate risk, generate quote. A process that normally involved multiple systems, manual data entry, and hours of human work per application.

“I wanted to see if Claude could replicate what an underwriter does. Not write code about insurance — actually underwrite.”

Six hours later, he had a working prototype.

The Underwriting Challenge

Insurance underwriting is complex because it combines multiple skills:

Document understanding: Application forms come in various formats. Typed. Handwritten. PDFs. Images. The system must extract structured data from unstructured documents.

External enrichment: The application data alone isn’t enough. You need external signals: flood zones, crime statistics, property details, historical claims data.

Risk calculation: All that data feeds into rating algorithms. Different factors, different weights, different outcomes.

Quote generation: The final step: a human-readable quote showing coverage options and pricing.

“Traditional underwriting systems take years to build. I wanted to see what one afternoon could produce.”

Phase One: Document Extraction

Nauman started with the hardest part: reading applications.

He gathered sample insurance application PDFs. Some were digital forms with clear fields. Others were scanned documents with handwritten entries.

“I told Claude: parse these PDFs. Extract key underwriting fields: business type, property address, coverage limits requested, years in operation, prior claims history.”

Claude used OCR capabilities to convert documents to text, then reasoned about the structure to identify field locations and extract values.

Twenty minutes later: structured data from five sample applications. Fields that would have required manual data entry were extracted automatically.

“The handwriting recognition wasn’t perfect. About 85% accurate. But that’s better than many commercial OCR solutions.”

Phase Two: Risk Enrichment

Application data tells you what the customer wants. External data tells you what the risk actually is.

Nauman configured Claude to call external APIs:

FEMA flood zone data: Given a property address, retrieve the flood zone designation. Zone A? High risk. Zone X? Lower risk.

FBI crime statistics: By ZIP code, retrieve property crime rates, violent crime rates. Higher crime means higher risk for certain coverages.

Property data services: Square footage, construction type, year built. All factors in determining replacement cost and risk profile.

“Claude made the API calls, parsed the responses, and enriched each application with external data. What would take an underwriter thirty minutes of lookup work, Claude did in thirty seconds.”

The enrichment phase took another thirty minutes. Five applications now had complete risk profiles.

Phase Three: Rating Logic

With data in hand, Claude implemented rating algorithms.

Insurance rating is essentially: base rate × modifiers. The base rate depends on coverage type. The modifiers reflect risk factors.

“I gave Claude our rating logic: GL base rate by industry class, property rate by construction type, modifiers for location, claims history, years in business.”

Claude built functions that took the enriched application data and calculated premiums. Different coverage lines — General Liability, Property, Professional Liability — each with their own logic.

“Where APIs existed for certain rating tables, Claude called them. Where they didn’t exist, I had Claude use state-specific static tables I provided.”

The fallback handling was important. Real underwriting systems must work even when data sources are unavailable.

Phase Four: Quote Generation

The final step: producing something a human could review.

Claude generated quote summaries: coverage options, premium breakdowns, risk scores, key factors that influenced pricing.

“The output wasn’t pretty. No formatting, no branding. But the numbers were there. Coverage limits, premiums by line, total annual cost.”

A quote that would normally take an underwriter two hours to produce — gathering data, running calculations, documenting the analysis — generated in minutes.

The Compound Effect

Nauman reflected on what made this work.

“Each capability alone — OCR, API calls, calculations, document generation — isn’t revolutionary. The compound effect is what matters.”

In traditional systems, these capabilities live in different tools. OCR in one system, APIs in another, rating in a third, document generation somewhere else. Integration is the hard part.

“Claude did all of it in one session. No integration layer. No data handoffs. The same context that extracted the application data also called the APIs, ran the calculations, and generated the quote.”

The integrated workflow was the breakthrough, not any individual capability.

The Validation Phase

After the initial build, Nauman spent two hours testing edge cases.

What if the flood zone API returned no data? Claude needed fallback logic.

What if the application was missing required fields? Claude needed to flag incomplete submissions.

What if the handwriting was illegible? Claude needed confidence scores and human review triggers.

“Real underwriting has a thousand edge cases. I handled maybe fifty in my testing. But the framework was there to add more.”

The Production Gap

Nauman was honest about limitations.

“This prototype isn’t production-ready. No compliance review, no audit trail, no approval workflows. You couldn’t deploy this tomorrow.”

The gap between prototype and production was significant. Real insurance systems need regulatory compliance, data security, integration with policy administration systems, user interfaces for underwriters.

“But as a proof of concept — showing that AI can actually underwrite, not just assist underwriting — it worked.”

The Efficiency Calculation

Nauman did the math on the prototype’s efficiency.

Traditional underwriting time for five applications: 8-10 hours of human work across document review, data lookup, rating, quote preparation.

Prototype time: 6 hours of Nauman guiding Claude, plus about 30 minutes of automated processing per application.

“If the prototype scaled — and that’s a big if — we’re talking 90% reduction in underwriting time. Not incremental improvement. Order of magnitude.”

The Expansion Path

Nauman outlined what it would take to go from prototype to product.

More coverage lines: The prototype handled General Liability and Property. Real commercial insurance includes Workers’ Comp, Professional Liability, Umbrella, and more.

Better document handling: More form formats, better OCR accuracy, handling of supplemental documents like loss runs and certificates.

Integration: Connecting to policy administration systems, payment processing, agency management tools.

Compliance: State-specific rating rules, regulatory filing requirements, audit capabilities.

“Each of these is a project in itself. But the core underwriting logic — the part that’s hardest to build from scratch — is proven.”

The Broader Implication

Nauman saw the insurance application as representative of a class of problems.

“Insurance underwriting is: read documents, gather data, apply rules, generate output. That pattern appears everywhere.”

Loan underwriting. Claims processing. Medical prior authorizations. Legal document review. Any workflow that combines document understanding with external data and rule application.

“If Claude can underwrite insurance, it can handle similar workflows in other industries. The tools are transferable.”

The Human Role

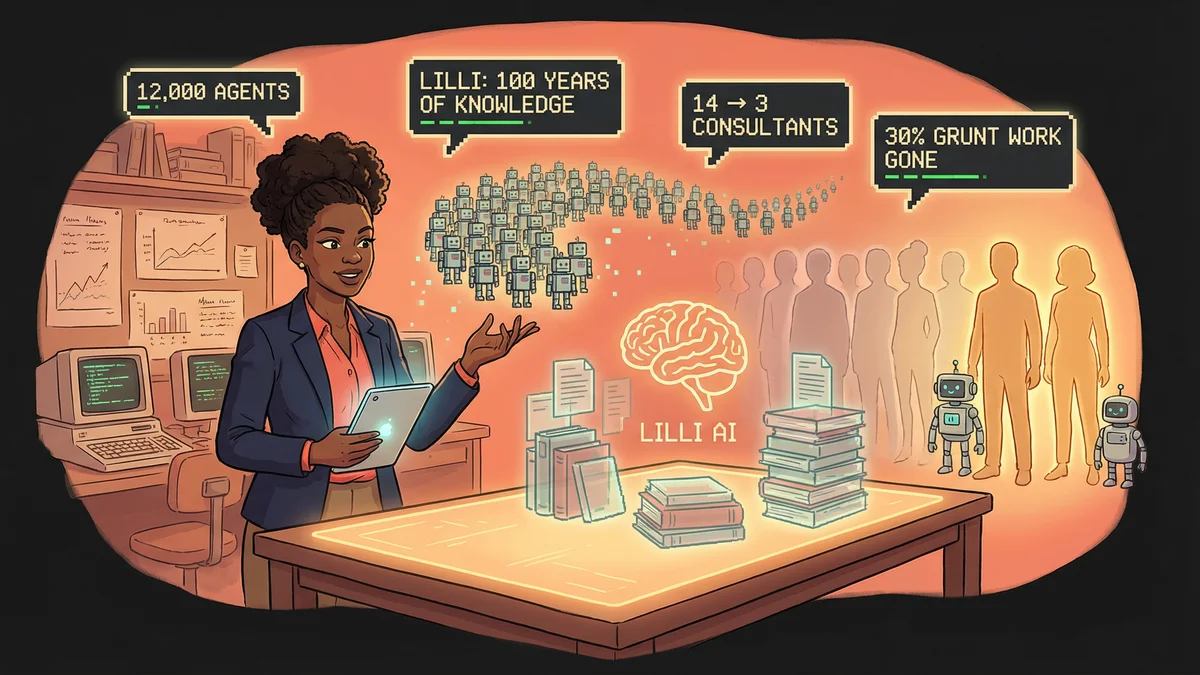

The prototype didn’t eliminate humans. It changed what they did.

“An underwriter using this system wouldn’t do data entry or API lookups. They’d review Claude’s analysis, verify edge cases, make judgment calls on ambiguous situations.”

The work shifted from mechanical to cognitive. Less time typing, more time thinking.

“That’s probably the future of knowledge work. AI handles the routine. Humans handle the exceptions and the judgment.”

The Final Assessment

Six hours. One afternoon. A working prototype of what normally takes teams years to build.

“Was it perfect? No. Was it production-ready? No. Did it demonstrate something real? Absolutely.”

The demonstration proved that integrated AI workflows could handle complex business processes end-to-end. Not in theory — in practice, with real documents and real calculations.

“I’ve seen enough enterprise software projects to know how long this usually takes. What Claude did in six hours would normally be a six-month project to even scope.”

The gap between prototype and production remained. But the prototype existed. The concept was proven. The path forward was visible.