TL;DR

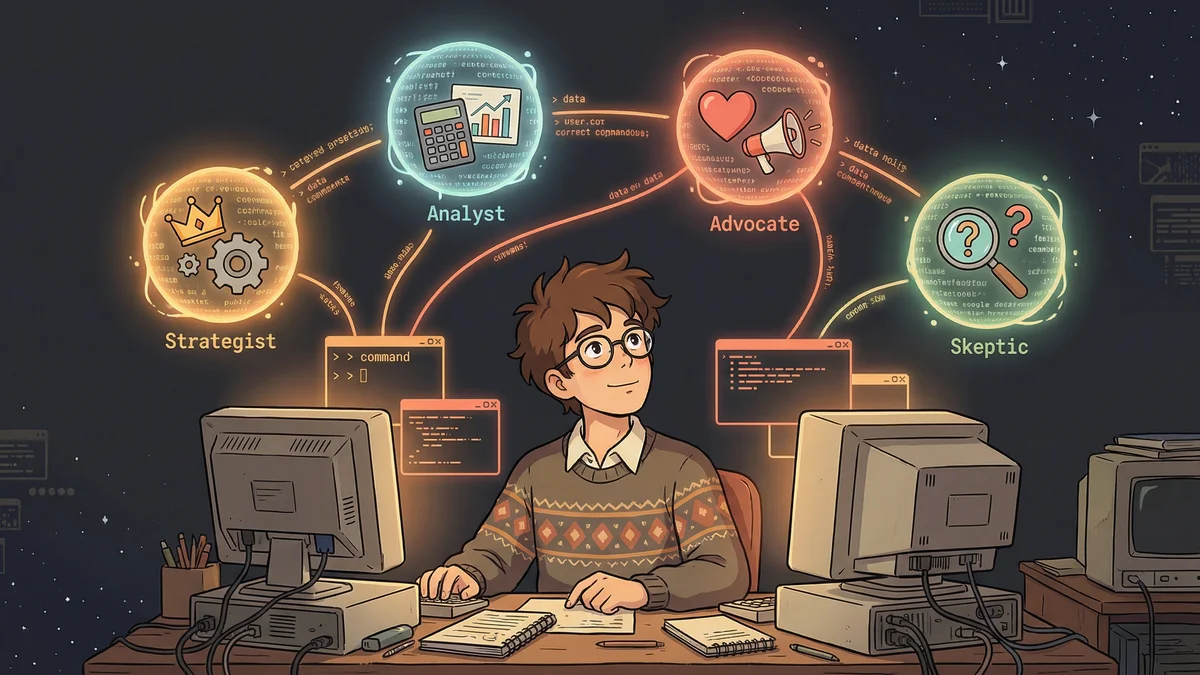

- Running multiple Claude Code instances with different “roles” (Strategist, Analyst, Advocate, Skeptic) produces dramatically different perspectives on the same problem

- A fifth “Synthesizer” instance integrates conflicting viewpoints into actionable recommendations

- Best for: Complex strategic decisions like pricing, product design, or communication strategy where multiple perspectives add value

- Cost consideration: Four perspectives use ~4x the tokens—reserve for important decisions, not daily tasks

- Key insight: The process trains your own thinking; you internalize the perspectives for future solo decisions

Multiple Claude Code instances running in parallel can simulate a diverse advisory board, with each AI “mind” specializing in strategic, analytical, empathetic, or skeptical viewpoints.

Derek had a problem too complex for one perspective.

He was redesigning his company’s pricing model. Every approach he considered had tradeoffs. Raise prices and risk losing customers. Lower prices and hurt margins. Bundle services and add complexity. Unbundle and fragment the offering.

“I needed different viewpoints. In a normal company, you’d get people from sales, finance, product, and strategy in a room. But I’m a solopreneur. The room has one person: me.”

He’d tried asking Claude for different perspectives by prompting “think like a CFO” or “consider the customer’s view.” It helped, but felt artificial. The AI still had one voice.

Then he discovered you could run multiple Claude Code instances simultaneously.

The Multi-Agent Setup

Derek opened four terminal windows. Each ran Claude Code.

He gave each instance a different role:

Instance 1 — The Strategist: “You are a business strategist. Think in terms of market positioning, competitive dynamics, and long-term brand building. Be ambitious.”

Instance 2 — The Analyst: “You are a financial analyst. Think in terms of margins, unit economics, and cash flow impact. Be conservative with numbers.”

Instance 3 — The Advocate: “You are a customer advocate. Think about how pricing changes affect customer perception, loyalty, and word-of-mouth. Be empathetic.”

Instance 4 — The Skeptic: “You are a professional skeptic. Your job is to find holes in any proposal. Be thorough in identifying risks.”

Same pricing problem, four different lenses.
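A minimal sketch of this setup in Python, assuming you want the four role prompts kept in one place so that every instance gets exactly the same question. The role text is quoted from above; the names `ROLES` and `build_prompts` are hypothetical, and the sketch only builds the text you would paste into each terminal. It does not call Claude Code itself.

```python
# Role instructions quoted from the article. The dictionary and helper
# names are illustrative, not part of any Claude Code API.
ROLES = {
    "Strategist": (
        "You are a business strategist. Think in terms of market positioning, "
        "competitive dynamics, and long-term brand building. Be ambitious."
    ),
    "Analyst": (
        "You are a financial analyst. Think in terms of margins, unit economics, "
        "and cash flow impact. Be conservative with numbers."
    ),
    "Advocate": (
        "You are a customer advocate. Think about how pricing changes affect "
        "customer perception, loyalty, and word-of-mouth. Be empathetic."
    ),
    "Skeptic": (
        "You are a professional skeptic. Your job is to find holes in any "
        "proposal. Be thorough in identifying risks."
    ),
}


def build_prompts(question: str, roles: dict[str, str] = ROLES) -> dict[str, str]:
    """Pair each role's instructions with the same question."""
    return {name: f"{role}\n\nQuestion: {question}" for name, role in roles.items()}
```

Calling `build_prompts("Evaluate a 15% price increase for new customers.")` returns one prompt per role, each ending with the identical question, which keeps the comparison between perspectives fair.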

The First Round

Derek posed the same question to all four:

“I’m considering raising prices 15% for new customers while keeping existing customers at current rates. Evaluate this approach.”

The responses differed dramatically.

Strategist: “Good move. Price increases signal value. Grandfathering existing customers shows loyalty. You’re building premium positioning.”

Analyst: “The math works. 15% increase on new customers at current acquisition rate adds $47K annually. Watch churn metrics closely.”

Advocate: “Risky. Existing customers will learn about the two-tier pricing. They’ll feel they’re getting a worse deal, even though their price didn’t change. Resentment builds.”

Skeptic: “What happens when existing customers leave and return? Do they get old pricing? New pricing? Either choice creates problems. And what about referrals — do friends of customers get special treatment?”

“The Skeptic found edge cases I hadn’t considered. The Advocate predicted an emotional response I’d dismissed. Having four viewpoints in parallel was powerful.”

The Synthesis Challenge

Four perspectives created a new problem: integration.

Each AI made valid points. Some conflicted. Derek needed to synthesize them into a decision.

He created a fifth role: The Synthesizer.

“You’re a decision integrator. You’ll receive analyses from four different perspectives. Your job is to identify: (1) where they agree, (2) where they conflict, (3) what additional information would resolve conflicts, (4) a recommended path forward.”

He fed all four analyses to the Synthesizer. It produced a unified view highlighting the tradeoffs and suggesting a modified approach that addressed the Skeptic’s concerns.
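One way to prepare the Synthesizer's input, sketched in Python. The role text is quoted from above; the function name and the labeled-section layout are assumptions for illustration, not Derek's actual format.

```python
# Synthesizer instructions quoted from the article.
SYNTHESIZER_ROLE = (
    "You're a decision integrator. You'll receive analyses from four different "
    "perspectives. Your job is to identify: (1) where they agree, (2) where they "
    "conflict, (3) what additional information would resolve conflicts, "
    "(4) a recommended path forward."
)


def build_synthesis_prompt(analyses: dict[str, str]) -> str:
    """Combine the role instructions with each analysis under its role name."""
    # Labeling each section lets the Synthesizer attribute points to a role.
    sections = [f"[{name}]\n{text.strip()}" for name, text in analyses.items()]
    return SYNTHESIZER_ROLE + "\n\n" + "\n\n".join(sections)
```

Labeling each analysis by role is a small design choice that makes it easier for the Synthesizer to say who agrees with whom and where the conflicts sit.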

The Iterative Process

The experiment became a process.

Round 1: Pose initial question to all four perspectives

Round 2: Synthesize responses, identify gaps

Round 3: Follow-up questions to specific roles

Round 4: Final synthesis and recommendation

Each round refined the thinking. The pricing decision evolved from “raise 15%” to a more nuanced model with customer tier definitions, referral handling, and communication strategy.
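The four rounds can be sketched as a small Python function. This assumes a hypothetical `ask(role, prompt)` callable that returns that role's response (in Derek's manual version, this is him pasting text into the right terminal), and a `choose_follow_ups` callable standing in for the human deciding which roles to question again.

```python
from typing import Callable

# Hypothetical signature: (role_name, prompt) -> response text.
Ask = Callable[[str, str], str]


def _labeled(responses: dict[str, str]) -> str:
    """Format responses as labeled sections."""
    return "\n\n".join(f"[{role}]\n{text}" for role, text in responses.items())


def run_four_rounds(
    question: str,
    roles: list[str],
    ask: Ask,
    choose_follow_ups: Callable[[str], dict[str, str]],
) -> str:
    # Round 1: pose the initial question to every perspective.
    round1 = {role: ask(role, question) for role in roles}
    # Round 2: synthesize the responses and identify gaps.
    gaps = ask("Synthesizer", "Synthesize these and list open gaps:\n\n" + _labeled(round1))
    # Round 3: follow-up questions to specific roles, chosen from the gaps.
    round3 = {role: ask(role, q) for role, q in choose_follow_ups(gaps).items()}
    # Round 4: final synthesis and recommendation.
    return ask(
        "Synthesizer",
        "Give a final recommendation using:\n\n"
        + _labeled(round1)
        + "\n\nFollow-ups:\n\n"
        + _labeled(round3),
    )
```

Keeping `ask` as a parameter means the same loop works whether responses come from a person copying text between terminals or from a script.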

“I wouldn’t have gotten there alone. Each perspective pushed the idea in directions I couldn’t have imagined myself.”

The Cognitive Division

Derek realized he’d created something like a board of advisors.

Each AI “mind” specialized in certain concerns. The Strategist thought big-picture. The Analyst watched the numbers. The Advocate kept humans centered. The Skeptic stress-tested everything.

“In a company, these would be different people with different training and incentives. I simulated that diversity with different prompts to the same underlying AI.”

The limitation: the same model underpinned all instances. True diversity of thought was bounded by Claude’s training. But the structural separation still helped.

The Hierarchy Version

Derek experimented with hierarchy.

Instead of parallel equals, he created layers:

Level 1 (Workers): Three instances doing research, analysis, drafting

Level 2 (Reviewer): One instance reviewing worker outputs, requesting revisions

Level 3 (Decider): Derek himself, making final calls based on reviewed work

The hierarchy channeled outputs upward. Lower levels did detailed work. Higher levels evaluated and directed.
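A rough Python sketch of the worker and reviewer layers, under stated assumptions: `work` and `review` are hypothetical callables standing in for the Claude Code instances, the reviewer returns `None` to approve or a feedback string to request a revision, and the cap on revisions is an added safeguard the article does not mention. Level 3 is simply the human reading the returned drafts.

```python
from typing import Callable, Optional


def review_cycle(
    tasks: dict[str, str],
    work: Callable[[str, str], str],
    review: Callable[[str, str], Optional[str]],
    max_revisions: int = 2,
) -> dict[str, str]:
    """Run each worker, then let the reviewer request up to max_revisions rewrites."""
    reviewed = {}
    for worker, task in tasks.items():
        draft = work(worker, task)  # Level 1: worker produces a draft.
        for _ in range(max_revisions):
            feedback = review(worker, draft)  # Level 2: reviewer checks it.
            if feedback is None:  # None means the draft is approved.
                break
            draft = work(worker, f"{task}\n\nRevise per reviewer feedback: {feedback}")
        reviewed[worker] = draft
    return reviewed  # Level 3: the human decides based on these drafts.
```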

“It mimicked how organizations actually function. Specialization at the bottom, integration at the top.”

The Orchestration Challenge

Managing multiple AI instances required coordination.

Derek created a simple system: each instance wrote outputs to a shared folder. Higher-level instances read from that folder. The folder became a communication channel.

More sophisticated users built automation: scripts that triggered instance runs in sequence, passed outputs between them, compiled final reports.
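The shared-folder channel can be sketched with Python's standard `pathlib`. The one-file-per-role layout and the `.md` extension are assumptions; the article only says that instances wrote to a shared folder and higher-level instances read from it.

```python
from pathlib import Path


def write_output(folder: Path, role: str, text: str) -> Path:
    """Save one role's output as <role>.md in the shared folder."""
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / f"{role.lower()}.md"
    path.write_text(text, encoding="utf-8")
    return path


def read_outputs(folder: Path) -> dict[str, str]:
    """Collect every role's output, keyed by file name without extension."""
    return {p.stem: p.read_text(encoding="utf-8") for p in sorted(folder.glob("*.md"))}
```

A higher-level instance (or a script feeding it) would call `read_outputs` on the same folder and pass the collected text along as its input.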

“The tooling is primitive right now. In the future, there’ll probably be proper orchestration systems. For now, it’s manual but still valuable.”

The Token Math

Multiple instances multiplied costs.

Four perspectives on one question used roughly four times the tokens. Complex problems with multiple rounds added up.
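A back-of-the-envelope estimate in Python makes the multiplication concrete. The function name and the example figures are hypothetical, and the model is deliberately crude: it treats every call as the same size, so it understates synthesis calls, which must also read all four analyses as input.

```python
def estimate_tokens(
    tokens_per_call: int,
    perspectives: int = 4,
    rounds: int = 1,
    synthesis_calls: int = 0,
) -> int:
    """Rough total: one call per perspective per round, plus synthesis calls."""
    return tokens_per_call * (perspectives * rounds + synthesis_calls)
```

Under these assumptions, a question that would cost 2,000 tokens in a single session comes to `estimate_tokens(2000)`, or 8,000 tokens, across four perspectives; two rounds plus two synthesis calls brings `estimate_tokens(2000, rounds=2, synthesis_calls=2)` to 20,000.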

“I saved it for important decisions. Pricing strategy? Worth the cost. Choosing an email subject line? Overkill.”

The multi-agent approach was a heavy tool. Appropriate for strategic questions, not daily tasks.

The Unexpected Benefits

Beyond better answers, the process changed how Derek thought.

“I internalized the perspectives. When considering a decision alone now, I automatically think: what would the Skeptic say? What would the Advocate flag?”

The AI exercise trained his own cognition. The four perspectives became mental models he could apply without running instances.

The Pattern Library

Derek documented patterns for different situations:

Strategic planning: Strategist + Analyst + Skeptic + Implementer

Product decisions: User Advocate + Engineer + Designer + Business

Communication: Writer + Editor + Audience Representative + Critic

Problem-solving: Divergent Thinker + Convergent Thinker + Historian + Futurist

Each pattern suited different question types. The roles were adjustable — just prompts that could be refined.

The Limitations Acknowledged

The approach wasn’t magic.

“The AI is still one model, even when wearing different hats. It can’t truly simulate a finance person with 20 years of experience — it can only approximate that perspective.”

True expertise, real-world experience, gut instincts built over careers — these couldn’t be prompted into existence.

“Multi-agent is better than single-agent for complex questions. It’s not as good as actual diverse humans with actual diverse experience.”

Derek used the approach when human advisors weren’t available or affordable. For the most important decisions, he still sought real human input.

The Future Vision

Derek saw multi-agent AI as early infrastructure.

“Someday, complex problem-solving will involve coordinated AI teams by default. Different agents specialized for different cognitive functions, orchestrated by systems more sophisticated than ‘four terminal windows.’”

The current state was primitive but functional, like early databases before SQL or the early web before frameworks. The patterns were emerging. The tooling would catch up.

The Recommendation

For others experimenting with multi-agent approaches:

“Start simple. Two perspectives on one question. See if the diversity helps. Then scale up.”

The complexity wasn’t in running multiple instances — that was just opening more terminals. The complexity was in choosing useful perspectives, synthesizing conflicting outputs, and knowing when the approach was worth the overhead.

“Most questions don’t need four minds. Some questions need ten. The skill is recognizing which is which.”