TL;DR

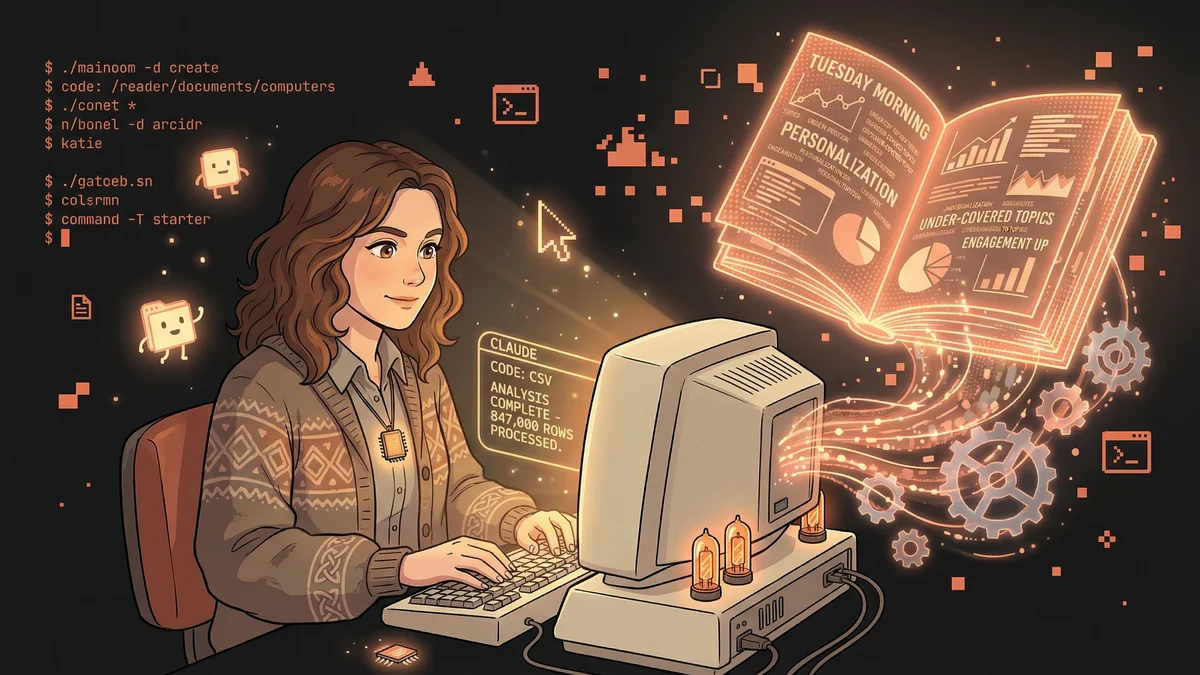

- Processed an 847,000-row CSV that cloud AI tools refused with “file too large” errors

- Improved open rates by 18% and engagement by 24% within three months by acting on data-driven insights

- Used Claude Code locally to bypass the upload and context limits of cloud tools

- Best for: Anyone with large datasets stuck behind cloud AI processing limits

- Key lesson: Local AI tools enable complete dataset analysis instead of unreliable samples

An editorial lead processed an 847,000-row CSV file with Claude Code after cloud AI tools refused it, discovering content performance patterns that boosted engagement by 24%.

Katie had the data. She couldn’t use it.

A year’s worth of newsletter performance metrics. Open rates, click rates, engagement scores, reader responses. All sitting in a CSV file.

The file was 847,000 rows. Her cloud AI tool refused to process it.

“I kept getting errors. ‘File too large.’ ‘Context window exceeded.’ ‘Please upload a smaller file.’ But I needed the whole year, not a sample.”

The Analysis She Needed

Katie was the editorial lead for a digital publication. Her job included understanding what content performed best.

Not guessing. Not going by gut. Actually analyzing the data to find patterns.

“Which topics drove opens? What subject lines worked? Did long pieces perform differently than short ones? I had the data to answer these questions. I just couldn’t get to it.”

Cloud-based AI tools had limits. Upload limits. Context limits. Processing limits. Her file exceeded all of them.

The Workaround Attempts

Katie tried chunking the data.

She split the CSV into twelve monthly files. Analyzed each separately. Tried to manually combine the insights.

“It was useless. The patterns I was looking for spanned the whole year. Seasonal trends. Cumulative reader behavior. You can’t see that in monthly chunks.”

She tried sampling. Took every tenth row. Got a smaller file that processed.

“But sampling introduced noise. Statistical significance went out the window. The insights weren’t reliable.”
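Her concern shows up most clearly in small subgroups. Assuming each row records one send that was either opened or not (the source does not describe the file's schema), the standard error of an open rate p estimated from n rows, and the effect of keeping only one row in ten, are:

```latex
\mathrm{SE}(\hat p) = \sqrt{\frac{\hat p\,(1-\hat p)}{n}}, \qquad
\mathrm{SE}_{n/10} = \sqrt{10}\,\mathrm{SE}_{n} \approx 3.16\,\mathrm{SE}_{n}
```

For a hypothetical topic-and-weekday cell with 2,000 rows and an open rate of 0.30, the standard error is about 1.0 percentage point on the full data but about 3.2 points on a 1-in-10 sample, which is enough to swamp differences of a few points between days or subject-line styles.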

She tried hiring a data analyst. Got quotes for $3,000+ for what she considered a basic question.

“I just wanted to know what was working. Not build a dashboard. Not create a machine learning model. Just: what patterns exist in this data?”

The Local Solution

A colleague mentioned Claude Code. “It runs on your computer. No upload limits.”

Katie was skeptical. “I’d heard it was for developers. I wasn’t a developer. I just had a CSV file I couldn’t process.”

But desperation breeds experimentation. She installed Claude Code. Pointed it at her data file.

“I asked: ‘Read this entire CSV. Tell me what patterns you find in content performance.’”

No error messages. No “file too large.” Claude started reading.
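Claude Code runs in the terminal on the user's own machine and can write and run scripts there, so the file never passes through an upload form. The source does not show what Claude actually ran; a plausible sketch, assuming Python's standard library and hypothetical columns `send_day`, `sends`, and `opens`, is a script that streams the CSV one row at a time and keeps only running totals, so only the summary has to reach the model:

```python
import csv
import io
from collections import defaultdict

def open_rate_by_key(rows, key):
    """Pool opens and sends per value of `key` in a single pass over the rows."""
    sends = defaultdict(int)
    opens = defaultdict(int)
    for row in rows:
        value = row[key]
        sends[value] += int(row["sends"])
        opens[value] += int(row["opens"])
    # Divide pooled totals, so rows with larger sends carry proportionally more weight.
    return {value: opens[value] / sends[value] for value in sends if sends[value] > 0}

def open_rate_by_day_from_file(path):
    """Stream a CSV from disk; DictReader yields one row at a time, not the whole file."""
    with open(path, newline="", encoding="utf-8") as f:
        return open_rate_by_key(csv.DictReader(f), "send_day")

# A tiny in-memory stand-in for the real file (hypothetical rows).
sample = io.StringIO(
    "send_day,sends,opens\n"
    "Tue,1000,300\n"
    "Fri,1000,200\n"
    "Tue,500,150\n"
)
print(open_rate_by_key(csv.DictReader(sample), "send_day"))
# {'Tue': 0.3, 'Fri': 0.2}
```

Memory use grows with the number of distinct keys (seven weekdays here), not with the number of rows, which is why a file of this size is not a problem when processed locally this way.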

The First Analysis

Claude processed all 847,000 rows. Took about ten minutes. Then reported back.

“Your highest-performing content shares these characteristics: subject lines with numbers, publication on Tuesday mornings, topics related to X and Y, and length between 800 and 1,200 words.”

Katie stared at the screen. “That was… it? That was what I’d been trying to figure out for months?”

The analysis continued. Open rates by day of week. Engagement correlation with topic categories. Reader response patterns over time.

“All the questions I had, answered in one session. No chunking. No sampling. No $3,000 analyst.”

The Deep Dives

Initial patterns led to follow-up questions.

“You mentioned Tuesday mornings perform best. Is that consistent across all topics, or does it vary?”

Claude drilled down. Tuesday worked best for business content. Thursday was actually better for personal development topics. Friday afternoon — which Katie had avoided — performed surprisingly well for entertainment pieces.

“I’d been publishing everything on a fixed schedule because I assumed consistency mattered. The data showed topic-specific timing mattered more.”
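Katie's follow-up is a two-key grouping: a result that holds on average across all topics can hide a different winner inside each topic. A minimal sketch of that breakdown, with hypothetical field names and made-up numbers rather than Katie's data:

```python
from collections import defaultdict

def best_day_per_topic(rows):
    """Return {topic: (best_day, open_rate)} using pooled opens/sends per (topic, day)."""
    sends = defaultdict(int)
    opens = defaultdict(int)
    for r in rows:
        key = (r["topic"], r["send_day"])
        sends[key] += r["sends"]
        opens[key] += r["opens"]
    best = {}
    for (topic, day), n in sends.items():
        if n == 0:
            continue
        rate = opens[(topic, day)] / n
        # Strictly greater: on a tie, the first day seen is kept.
        if topic not in best or rate > best[topic][1]:
            best[topic] = (day, rate)
    return best

rows = [  # hypothetical numbers, for illustration only
    {"topic": "business", "send_day": "Tue", "sends": 1000, "opens": 320},
    {"topic": "business", "send_day": "Thu", "sends": 1000, "opens": 250},
    {"topic": "personal_dev", "send_day": "Tue", "sends": 1000, "opens": 210},
    {"topic": "personal_dev", "send_day": "Thu", "sends": 1000, "opens": 290},
]
print(best_day_per_topic(rows))
# {'business': ('Tue', 0.32), 'personal_dev': ('Thu', 0.29)}
```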

Each answer generated new questions. Each question got answered from the same data file, without re-uploading, without hitting limits.

The Subject Line Laboratory

Katie focused on what she could control: subject lines.

“Show me all subject lines with above-average open rates. What patterns do they share?”

Claude identified multiple patterns:

- Questions outperformed statements

- Numbers in subject lines added 12% to open rates

- Personalization (“You” vs. “One”) mattered

- Curiosity gaps worked, but only if the content delivered

“I had intuitions about some of this. Now I had data. The curiosity gap thing surprised me — we’d overdone it, and readers were catching on.”
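A rough version of the subject-line comparison tags each subject line with simple features and compares mean open rates with and without each one. The feature rules (a digit anywhere, a trailing question mark, the word “you” or “your”) and the sample rows are assumptions for illustration; the source does not say how Claude defined them:

```python
import re
from statistics import mean

# Hypothetical feature rules, not the ones used in Katie's analysis.
FEATURES = {
    "has_number": lambda s: bool(re.search(r"\d", s)),
    "is_question": lambda s: s.rstrip().endswith("?"),
    "says_you": lambda s: bool(re.search(r"\byour?\b", s, re.IGNORECASE)),
}

def feature_lift(emails):
    """For each feature, relative lift in mean open rate: with the feature vs. without it."""
    result = {}
    for name, test in FEATURES.items():
        with_f = [e["open_rate"] for e in emails if test(e["subject"])]
        without = [e["open_rate"] for e in emails if not test(e["subject"])]
        if with_f and without:  # skip features that split the data into one empty side
            result[name] = mean(with_f) / mean(without) - 1
    return result

emails = [  # hypothetical data
    {"subject": "5 ways to fix your inbox", "open_rate": 0.33},
    {"subject": "Why do readers unsubscribe?", "open_rate": 0.31},
    {"subject": "Industry news roundup", "open_rate": 0.22},
    {"subject": "Our quarterly update", "open_rate": 0.24},
]
for name, lift in feature_lift(emails).items():
    print(f"{name}: {lift:+.0%}")
```

A raw with/without comparison like this also picks up differences in topic, send day, and audience, so it flags candidates for testing rather than proving that a feature causes higher opens.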

The Content Audit

Beyond performance, Katie wanted to understand content gaps.

“Based on topic performance and frequency, what are we over-covering and under-covering?”

Claude analyzed the intersection of frequency (how often the publication covered a topic) and performance (how well that topic performed).

- Over-covered: Industry news (high frequency, mediocre performance)

- Under-covered: Personal stories (low frequency, high performance)

“We’d been chasing ‘what’s timely’ instead of ‘what resonates.’ The data was clear: readers wanted more depth, less news summary.”
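The frequency-versus-performance comparison can be sketched as a two-axis split: compare each topic's publishing count and mean engagement with the medians across all topics. The median thresholds, field names, and numbers are assumptions for illustration:

```python
from statistics import median

def coverage_quadrants(topic_stats):
    """Label each topic by comparing its publishing count and mean engagement to the medians."""
    freq_mid = median(t["count"] for t in topic_stats.values())
    perf_mid = median(t["engagement"] for t in topic_stats.values())
    labels = {}
    for topic, t in topic_stats.items():
        high_freq = t["count"] > freq_mid
        high_perf = t["engagement"] > perf_mid
        if high_freq and not high_perf:
            labels[topic] = "over-covered"
        elif high_perf and not high_freq:
            labels[topic] = "under-covered"
        else:
            labels[topic] = "balanced"  # high on both axes, or not above the median on either
    return labels

topic_stats = {  # hypothetical counts and engagement scores
    "industry_news": {"count": 120, "engagement": 0.8},
    "personal_stories": {"count": 15, "engagement": 2.1},
    "how_to": {"count": 60, "engagement": 1.4},
}
print(coverage_quadrants(topic_stats))
# {'industry_news': 'over-covered', 'personal_stories': 'under-covered', 'how_to': 'balanced'}
```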

The Reader Segmentation

The CSV included reader engagement levels. Katie asked Claude to segment.

“What content works best for our most engaged readers versus casual readers?”

The segments wanted different things.

- Most engaged readers: Deep dives, data-heavy pieces, contrarian takes

- Casual readers: Practical tips, how-tos, quick reads

“I realized we’d been optimizing for casual readers because they were the majority. But our most engaged readers — who shared content, paid for subscriptions — wanted something different.”

The Competitive Insight

Katie added competitor performance data to the analysis.

“Compare our content performance to these industry benchmarks.”

Claude identified where the publication over-performed (practical content) and under-performed (thought leadership). The gaps weren’t where Katie expected.

“I thought we were weak on news analysis. Turns out we were weak on forward-looking prediction pieces. Completely different gap than I assumed.”

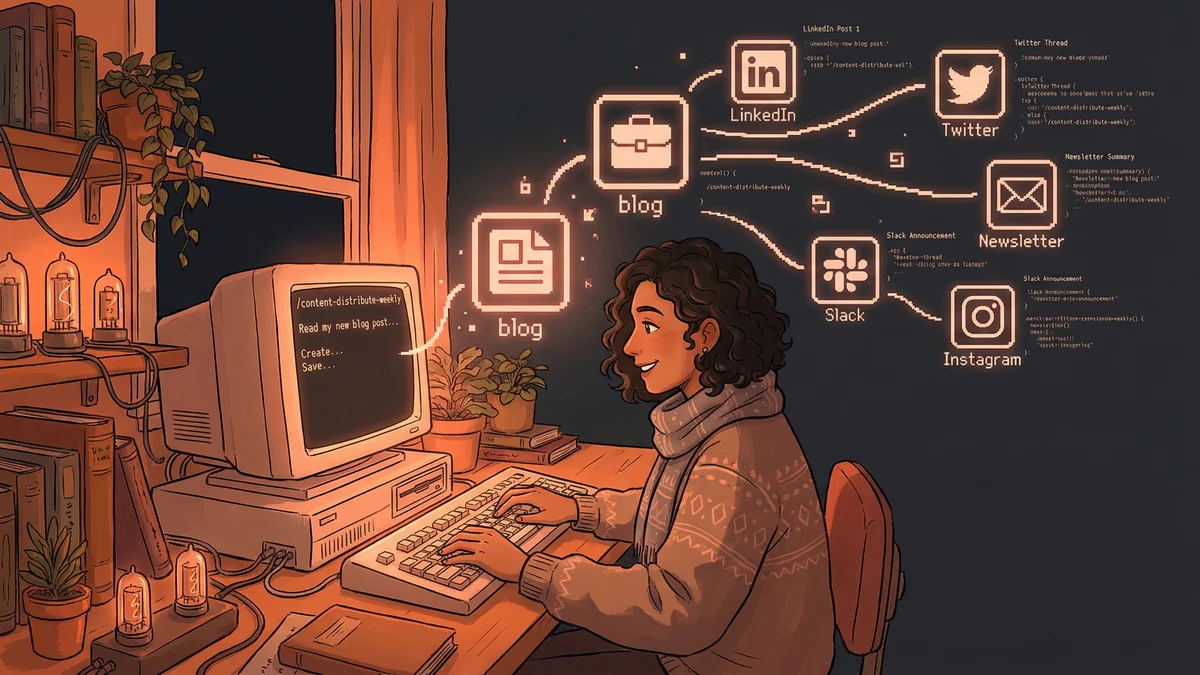

The Strategic Shift

The analysis led to concrete changes.

- Publishing schedule adjusted by topic type.

- Subject line templates created based on high-performers.

- Content mix shifted toward under-covered high-performers.

- Separate content tracks for engaged vs. casual readers.

“Three months after implementing the changes, our metrics improved measurably. Open rates up 18%. Engagement up 24%. Not because we worked harder. Because we worked smarter.”

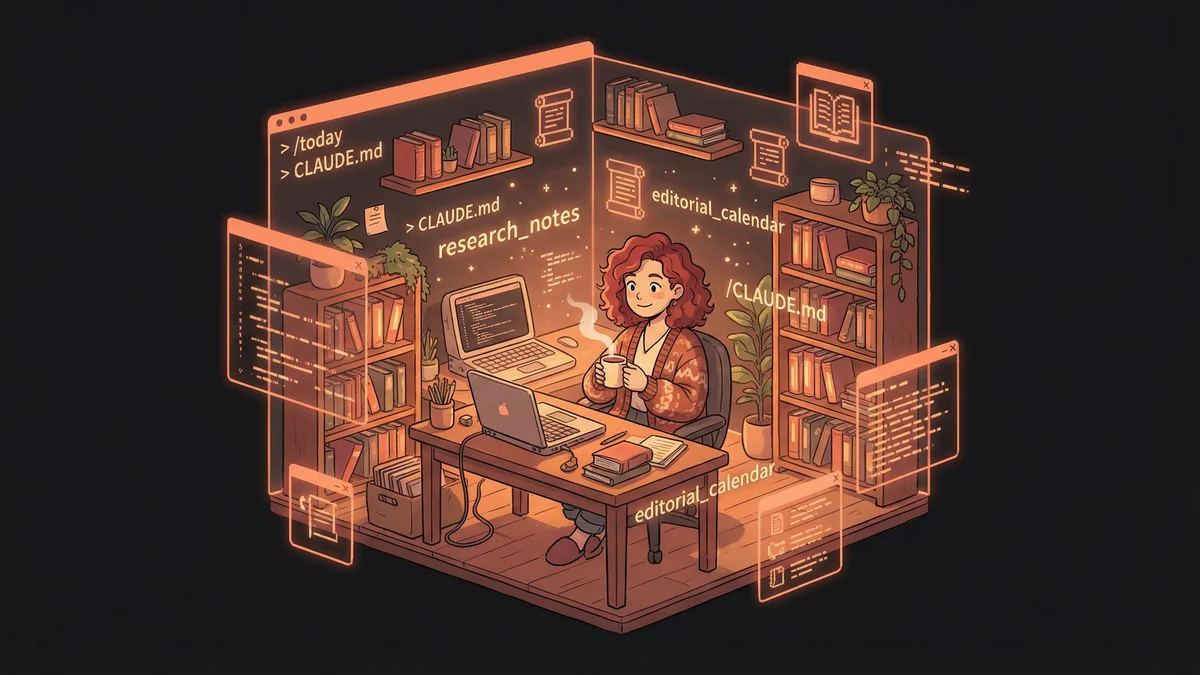

The Ongoing Practice

Katie made data analysis a monthly habit.

New performance data accumulated. Monthly Claude sessions to find emerging patterns. Quarterly reviews to adjust strategy.

“The data keeps teaching us. Reader preferences shift. What worked last quarter might not work next quarter. But now I can actually see the shifts.”

The 847,000-row file was now one of many. Years of data, accessible, analyzable.

The Methodology Note

Katie emphasized that data interpretation matters.

“Claude found patterns. I had to determine which patterns were actionable and which were noise. The tool didn’t make editorial decisions for me.”

Correlation wasn’t causation. Tuesday might perform well because of what they published on Tuesdays, not the day itself. Katie designed experiments to validate insights before acting on them.

“AI found the patterns. Human judgment evaluated the patterns. Both necessary.”
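The source does not describe Katie's experiments. One common design, sketched here as an assumption, is to randomly split the list, send the same issue to each half on different days, and apply a two-proportion z-test to the open counts. Because the split is random and the content is identical, a significant difference points at the send day itself rather than at what was published that day. The function names and numbers are hypothetical:

```python
import random
from math import erfc, sqrt

def random_split(subscribers, seed=0):
    """Shuffle a copy of the list and cut it in half, so each group is a random sample."""
    shuffled = list(subscribers)
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

def two_proportion_z_test(opens_a, sends_a, opens_b, sends_b):
    """Two-sided z-test for a difference between two open rates, using the pooled rate."""
    p_a = opens_a / sends_a
    p_b = opens_b / sends_b
    pooled = (opens_a + opens_b) / (sends_a + sends_b)
    se = sqrt(pooled * (1 - pooled) * (1 / sends_a + 1 / sends_b))
    if se == 0:
        raise ValueError("every recipient opened, or none did; there is no variance to test")
    z = (p_a - p_b) / se
    p_value = erfc(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|)) for a standard normal
    return z, p_value

group_tue, group_thu = random_split(range(10_000))
# Hypothetical results after sending the same issue to each group on different days.
z, p = two_proportion_z_test(1600, len(group_tue), 1450, len(group_thu))
print(f"z = {z:.2f}, p = {p:.4f}")
```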

The Advice for Data-Stuck Teams

“If you’re sitting on data you can’t analyze because tools have limits, try Claude Code. Local processing means your file size is only limited by your computer’s memory.”

The barrier wasn’t skill or budget. It was artificial limits on cloud tools.

“I’m not a data scientist. I asked questions in plain English and got answers. The hard part was having good questions, not technical capability.”