TL;DR

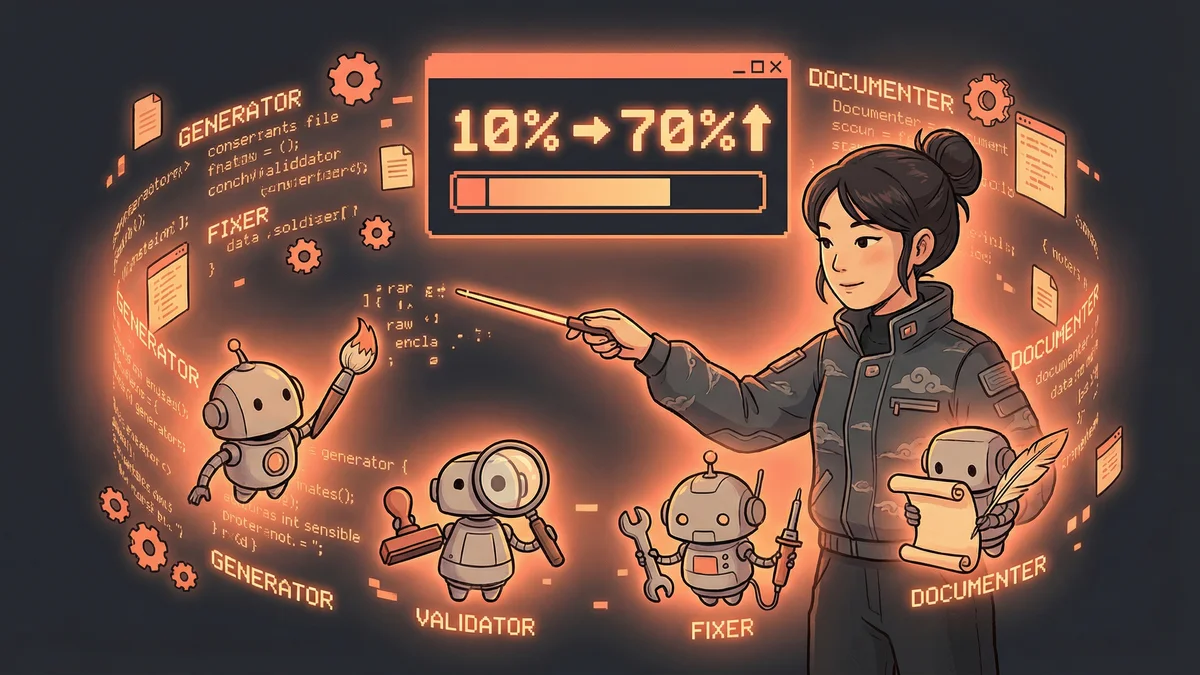

- PwC increased code generation accuracy from 10% to 70% for proprietary languages

- Used CrewAI multi-agent framework with specialized generator, validator, and fixer agents

- Human-in-the-loop checkpoints prevented AI fixes from going off track

- Best for: Enterprise teams with proprietary languages or domain-specific coding needs

- Key lesson: Specialized agents in coordination beat single brilliant generalist models

PwC solved their AI coding problem by abandoning single-model approaches and building a multi-agent crew that improved accuracy from 10% to 70%.

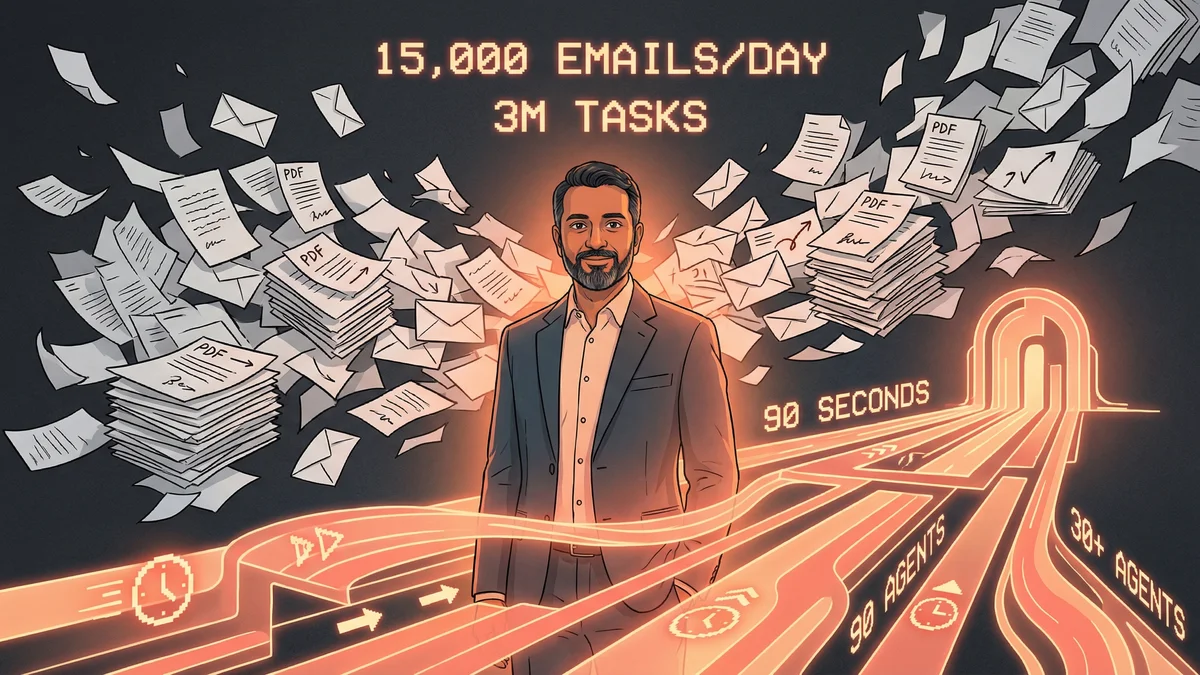

PwC had a code generation problem.

The global consulting firm used proprietary programming languages for internal tools. Languages their clients didn’t know. Languages the AI models hadn’t been trained on.

“Off-the-shelf AI coding tools were useless. They could write Python beautifully. Our proprietary DSL? Ten percent accuracy. Worse than useless—it was confidently wrong.”

Ten percent. Only one in ten code suggestions was actually correct. The other nine ranged from subtly broken to obviously garbage.

The first failure

PwC’s initial GenAI prototypes followed the standard playbook: prompt engineering, fine-tuning, retrieval augmentation.

None of it worked.

“We threw every technique at the problem. Better prompts. More examples. Domain-specific training data. The model kept hallucinating syntax that looked plausible but didn’t compile.”

The fundamental issue: a single model couldn’t reliably generate, validate, and fix code in an unfamiliar language. It needed to do all three, but couldn’t manage any of them consistently.

“Users lost trust fast. If an AI is wrong 90% of the time, people stop using it. We needed something radically different.”

The crew approach

PwC rebuilt their system using CrewAI—a framework for multi-agent orchestration.

Instead of one AI doing everything, specialized agents formed a crew:

The Generator writes initial code based on requirements. Its job is creativity, not correctness.

The Validator compiles and tests what the Generator produces. Its job is catching failures.

The Fixer receives error reports from the Validator and revises the code. Its job is iteration.

The Documenter produces explanations once working code exists. Its job is human communication.

Each agent does one thing well. The crew coordinates the workflow.
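To make the workflow concrete, here is a minimal sketch of the generate, validate, fix, document loop in plain Python. It is not PwC's code and it deliberately does not use the CrewAI API: each agent is modelled as an ordinary function, and every name here (`run_crew`, `ValidationReport`, `max_fix_rounds`, the toy agents) is a hypothetical chosen for illustration. In a real system the functions would wrap model calls and an actual compiler for the proprietary language.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ValidationReport:
    ok: bool
    errors: List[str] = field(default_factory=list)


@dataclass
class CrewResult:
    code: str
    docs: str
    passed: bool
    fix_rounds: int


def run_crew(
    requirement: str,
    generate: Callable[[str], str],
    validate: Callable[[str], ValidationReport],
    fix: Callable[[str, List[str]], str],
    document: Callable[[str], str],
    max_fix_rounds: int = 3,  # assumed limit, not from the article
) -> CrewResult:
    """Coordinate the four roles: generate once, then validate and fix in a loop."""
    code = generate(requirement)
    report = validate(code)
    rounds = 0
    while not report.ok and rounds < max_fix_rounds:
        # The Fixer sees the Validator's error report, not just the code.
        code = fix(code, report.errors)
        report = validate(code)
        rounds += 1
    # The Documenter only runs once working code exists.
    docs = document(code) if report.ok else ""
    return CrewResult(code=code, docs=docs, passed=report.ok, fix_rounds=rounds)


# Toy stand-ins for the agents, for demonstration only.
def toy_generate(requirement: str) -> str:
    return "PRINT hello"  # plausible-looking, but missing the terminator


def toy_validate(code: str) -> ValidationReport:
    if code.endswith(";"):
        return ValidationReport(ok=True)
    return ValidationReport(ok=False, errors=["missing ';'"])


def toy_fix(code: str, errors: List[str]) -> str:
    return code + ";" if "missing ';'" in errors else code


def toy_document(code: str) -> str:
    return f"Runs: {code}"


result = run_crew("print a greeting", toy_generate, toy_validate, toy_fix, toy_document)
print(result)
```

The point of the structure is that each role can be swapped or improved independently, and the loop has a hard stop so a Fixer that never converges cannot run forever.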

The human loop

Here’s what separated PwC’s implementation from failed prototypes: real-time human feedback.

“Early versions generated code, validated it, fixed it—all automatically. But the fixes often went sideways. The AI would ‘fix’ a syntax error by removing the feature entirely.”

The revised architecture included human consultants at key checkpoints. When the Validator flagged issues, humans could redirect the Fixer before it went off track.

“AI handles the heavy lifting. Humans handle the judgment calls. The combination works. Neither alone does.”
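One way to picture a checkpoint is as a review step between the Fixer's proposal and its acceptance. The sketch below is an assumption about how such a gate could look, not a description of PwC's system: `fix_with_checkpoint`, the reviewer callback, and the toy fixer are all hypothetical names. The reviewer returns `None` to approve a fix, or a guidance string that is fed back to the Fixer on the next attempt.

```python
from typing import Callable, List, Optional

# Hypothetical signatures for illustration only.
ProposeFix = Callable[[str, List[str], Optional[str]], str]
Review = Callable[[str, str], Optional[str]]


def fix_with_checkpoint(
    code: str,
    errors: List[str],
    propose_fix: ProposeFix,
    review: Review,
    max_rounds: int = 3,  # assumed limit
) -> str:
    """Accept a fix only after a human reviewer approves it."""
    guidance: Optional[str] = None
    for _ in range(max_rounds):
        candidate = propose_fix(code, errors, guidance)
        guidance = review(code, candidate)
        if guidance is None:  # reviewer approved
            return candidate
    # No approved fix: keep the original so nothing is silently lost.
    return code


# Toy fixer that first "fixes" the error by deleting the feature,
# mirroring the failure mode described above.
def toy_fixer(code: str, errors: List[str], guidance: Optional[str]) -> str:
    if guidance is None:
        return ""
    return code.replace("PRNT", "PRINT")


# Toy reviewer standing in for a human consultant.
def toy_reviewer(original: str, candidate: str) -> Optional[str]:
    if not candidate.strip():
        return "Keep the feature; repair the keyword instead."
    return None


fixed = fix_with_checkpoint(
    "PRNT total;", ["unknown keyword PRNT"], toy_fixer, toy_reviewer
)
print(fixed)
```

In this toy run the first proposal is rejected because it removes the statement entirely, and the second, guided proposal is approved.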

The result: 10% to 70%

After implementing the crew architecture with human checkpoints, code generation accuracy jumped from 10% to 70%.

Seven times better. Not perfect, but transformationally useful.

“At 10%, AI coding was a curiosity. At 70%, it’s a tool. Our consultants started actually using it because it actually helped.”

The remaining 30% still required human intervention. But 70% correct code with 30% requiring fixes beats 10% correct code with 90% requiring rewrites.
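The arithmetic behind that comparison is simple enough to check. This sketch assumes, as the article's framing does, that every suggestion is either correct or needs rework; the function name and the batch size of 100 are illustrative.

```python
def rework_summary(accuracy_pct: int, suggestions: int = 100) -> dict:
    """Split a batch of suggestions into correct ones and ones needing rework."""
    correct = suggestions * accuracy_pct // 100
    return {"correct": correct, "needs_rework": suggestions - correct}


before = rework_summary(10)
after = rework_summary(70)

usable_gain = after["correct"] / before["correct"]            # 70 / 10
rework_drop = before["needs_rework"] / after["needs_rework"]  # 90 / 30

print(before, after, usable_gain, rework_drop)
```

Per 100 suggestions, usable output goes from 10 to 70 (seven times more), while the pile needing human rework shrinks from 90 to 30 (a third of what it was).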

What the crew teaches

PwC’s experience reveals something about enterprise AI that single-model deployments miss:

Specialization beats generalization. One AI trying to generate, validate, and fix produces mediocre results in all three. Specialized agents, each handling one of those jobs, produce better results in coordination.

Validation is mandatory. AI that generates without validating generates confidently wrong output. The Validator agent catches failures before humans see them.

Iteration requires architecture. Fixing broken code isn’t just running the same prompt again. The Fixer agent has different instructions, different context, different capabilities.

Humans aren’t optional. The crew works because humans provide judgment the agents can’t. Removing humans to “fully automate” degraded results.

The multi-agent future

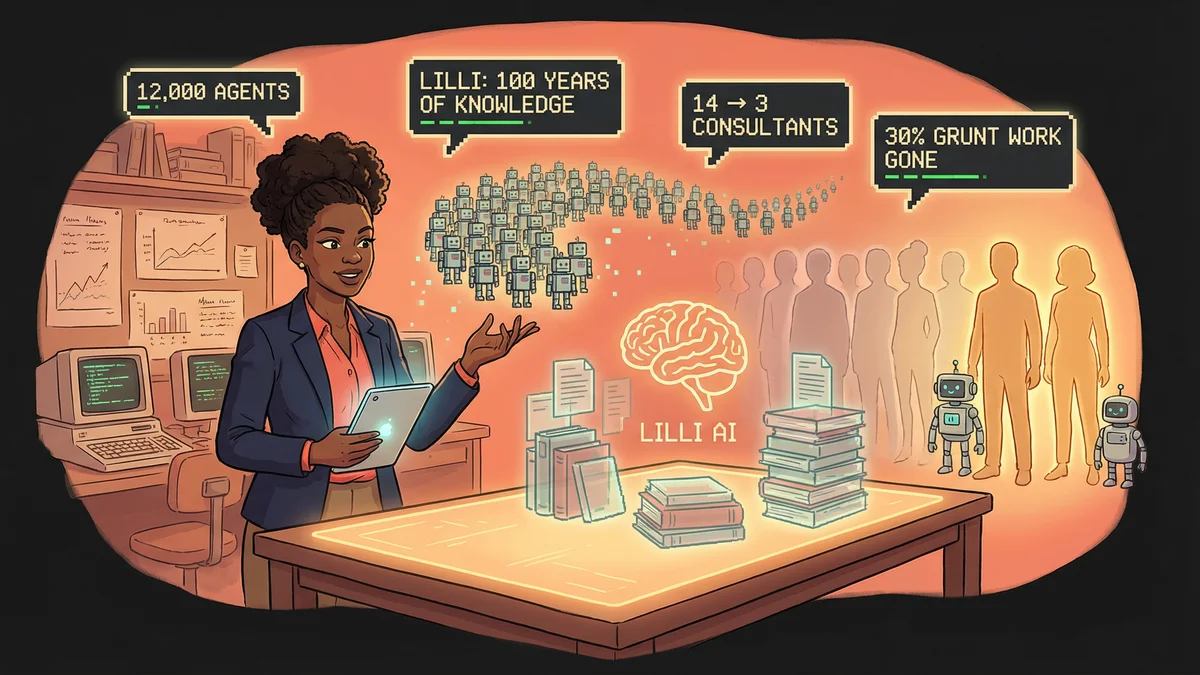

PwC’s success with CrewAI reflects a broader trend. 72% of enterprise AI projects in 2025 involve multi-agent architectures—up from 23% in 2024.

The pattern is clear: complex workflows benefit more from agent specialization than from raw model capability. A crew of focused agents outperforms a single brilliant generalist.

“We stopped asking ‘which AI model is best?’ and started asking ‘how do multiple AI models work together?’ That reframing changed everything.”

The code crew isn’t just PwC’s solution. It’s a template for enterprise AI that actually works.