TL;DR

- Autonomous loops let Claude iterate through problems until success—no human babysitting between attempts

- One engineer fixed 17 test failures overnight and estimates the approach saves him about ten hours a week on iterative tasks

- Key tools: Ralph Wiggum plugin, configurable iteration limits, checkpoint saves, escalation triggers

- Best for: Test suite fixes, documentation sync, config migrations, data cleanup—anything with clear success criteria

- Critical rule: Always set maximum iterations and change limits to prevent runaway automation

Claude Code autonomous loops transform single-shot AI interactions into persistent problem-solvers that diagnose, attempt, evaluate, and retry until they succeed.

Ryan’s test suite was failing. Seventeen errors across twelve files.

Traditional approach: fix one error, run tests, see what broke next, repeat. A cycle that could take hours when errors cascaded.

“I’d fix something, break something else, fix that, break two more things. Whack-a-mole with code.”

He wondered: what if Claude could just keep going until everything passed?

The Loop Concept

Most AI interactions are single-shot. Ask a question, get an answer. Describe a task, receive output.

But some problems require iteration. Try something. Check results. Adjust. Try again.

“I didn’t want to babysit the process. I wanted to set the goal and let Claude iterate until it succeeded.”

The concept: autonomous loops. Claude attempts a task, evaluates success, and keeps trying until done — without human intervention between attempts.
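The attempt, evaluate, retry cycle can be sketched in a few lines of Python. This only illustrates the control flow; it is not how Claude Code or any plugin implements it. The names `run_until_success`, `attempt`, and `succeeded` are hypothetical, and the toy `fake_attempt` stands in for a real model call followed by a test run.

```python
from typing import Callable


def run_until_success(attempt: Callable[[int], None],
                      succeeded: Callable[[], bool],
                      max_iterations: int = 10) -> int:
    """Call attempt(), then succeeded(), until success or the cap is hit.

    Returns the number of attempts made, or -1 if the cap was reached.
    """
    for i in range(1, max_iterations + 1):
        attempt(i)        # e.g. ask the model to make one change
        if succeeded():   # e.g. run the test and look at the result
            return i
    return -1


# Toy stand-in: the "problem" only gets solved on the fourth attempt.
state = {"fixed": False}


def fake_attempt(i: int) -> None:
    state["fixed"] = i >= 4


attempts = run_until_success(fake_attempt, lambda: state["fixed"])
print(attempts)  # prints 4
```

The cap matters even in a toy: without `max_iterations`, a problem that never passes its check would loop forever.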

The First Experiment

Ryan set up a simple test case.

The goal: fix a failing test. Not one interaction — a loop that would keep attempting until the test passed.

“I said: ‘This test is failing. Keep analyzing and fixing until it passes. Run the test after each change. If it still fails, diagnose why and try again.’”

He stepped away. Made coffee. Checked back twenty minutes later.

The test was passing.

Claude had made four attempts. First fix addressed the obvious error but exposed a hidden issue. Second fix handled that but broke a dependency. Third fix resolved the dependency. Fourth fix cleaned up an edge case.

“Each iteration built on learning from the previous failure. Claude diagnosed, attempted, evaluated, repeated.”

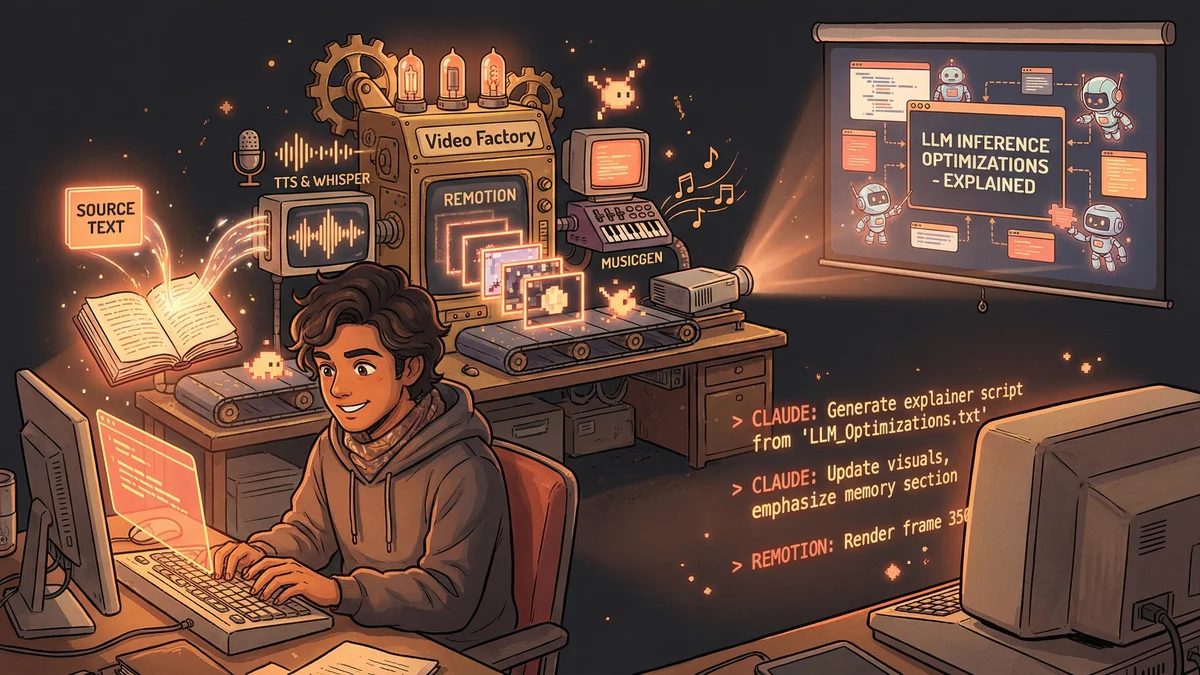

The Ralph Wiggum Discovery

Ryan found a Claude Code plugin that formalized this pattern.

The community called it Ralph Wiggum, partly as a joke and partly because it would persistently try things until something worked, much like the Simpsons character and his oblivious determination.

The plugin enabled configurable autonomous loops:

- Set a goal

- Define success criteria

- Set maximum iterations

- Let it run

“With Ralph Wiggum configured, I could say ‘fix all failing tests’ and walk away. Claude would iterate through each failure, making attempts until the entire suite went green.”

The Application to Bigger Problems

Ryan expanded the autonomous approach beyond simple test fixes.

Documentation synchronization: “Compare the code to the docs. Fix any discrepancies. Keep going until they match completely.”

Claude iterated: fixed one doc section, found another outdated reference, updated that, discovered a third issue, corrected it. The loop continued until full synchronization.

Configuration migration: “Migrate this config from format A to format B. Run validation after each change. Keep iterating until validation passes.”

Multiple iterations. Each caught different issues. The final output was clean.

Data cleanup: “Process this CSV. Fix formatting errors. Validate each row. Re-process any failures until all rows are valid.”

Some rows needed three passes. Others passed on first try. The autonomous loop handled variance automatically.
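As a rough sketch of why some rows need several passes while others need none, here is a small Python example. The validity rule (digits only), the `fix_once` steps, and the sample values are all made up for illustration; Ryan's actual data and rules are not described in this article.

```python
import re


def is_valid(value: str) -> bool:
    # Hypothetical rule: a valid cell contains digits only.
    return re.fullmatch(r"\d+", value) is not None


def fix_once(value: str) -> str:
    """Apply the first applicable fix; return the value unchanged if none applies."""
    if value != value.strip():
        return value.strip()
    if value.startswith("$"):
        return value[1:]
    if "," in value:
        return value.replace(",", "")
    return value


def clean_rows(rows, max_passes=5):
    """Re-process each value until it is valid, stops changing, or hits the cap."""
    cleaned, failed = [], []
    for value in rows:
        passes = 0
        while not is_valid(value) and passes < max_passes:
            fixed = fix_once(value)
            if fixed == value:   # no fix applies, so give up on this row
                break
            value = fixed
            passes += 1
        (cleaned if is_valid(value) else failed).append(value)
    return cleaned, failed


cleaned, failed = clean_rows(["42", " $1,000 ", "abc"])
print(cleaned)  # prints ['42', '1000']
print(failed)   # prints ['abc']
```

Here `"42"` passes on the first check, `" $1,000 "` needs three passes, and `"abc"` is reported as a failure instead of being retried indefinitely.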

The Iteration Intelligence

The key wasn’t blind repetition. It was intelligent adaptation.

“Each iteration, Claude remembered what it had tried. It didn’t repeat failed approaches. It built a model of the problem space through failure.”

- Iteration one: try the obvious fix

- Iteration two: the obvious fix failed, try the less obvious cause

- Iteration three: that revealed a deeper issue, address it

- Iteration four: clean up side effects from previous fixes

The loop wasn’t stupid persistence. It was systematic exploration.
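One way to picture "not repeating failed approaches" is a loop that keeps a record of what it has already tried and why each attempt failed. This is an analogy, not a description of how Claude works internally; `explore`, `try_fix`, and the candidate strings are hypothetical.

```python
def explore(candidates, try_fix, max_iterations=10):
    """Try each distinct candidate once, logging what failed and why.

    Returns (winning_candidate_or_None, history_of_failures).
    """
    history = []   # (candidate, failure reason) pairs
    tried = set()  # approaches already attempted
    for candidate in candidates:
        if len(history) >= max_iterations:
            break
        if candidate in tried:
            continue  # never repeat a failed approach
        tried.add(candidate)
        ok, reason = try_fix(candidate)
        if ok:
            return candidate, history
        history.append((candidate, reason))
    return None, history


def try_fix(candidate):
    # Toy evaluator: only the last idea actually works.
    if candidate == "address the deeper issue":
        return True, ""
    return False, f"tests still fail after: {candidate}"


candidates = [
    "try the obvious fix",
    "try the obvious fix",          # duplicate, skipped by the loop
    "try the less obvious cause",
    "address the deeper issue",
]
winner, history = explore(candidates, try_fix)
print(winner)        # prints address the deeper issue
print(len(history))  # prints 2
```

The failure history is what makes a final report possible: even when nothing works, the loop can say what it tried and why each attempt fell short.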

The Guardrails

Unlimited autonomy was dangerous.

Ryan implemented constraints:

Maximum iterations: If Claude hadn’t succeeded in 10 attempts, stop and report what it had learned. Some problems weren’t fixable through iteration.

Change limits: Each iteration could only modify a certain number of files. This prevented cascading changes that made things worse.

Checkpoint saves: State saved between iterations. If something went terribly wrong, rollback was possible.

Escalation triggers: Certain error types would pause the loop and ask for human guidance rather than continuing blindly.

“Autonomy with guardrails. The loop could run independently, but within boundaries.”
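The four constraints can be combined in one small loop. The sketch below is a minimal illustration under stated assumptions: `Guardrails`, `guarded_loop`, the file limit of 3, and the choice of `"PermissionError"` as an escalation trigger are all hypothetical, and none of it reflects the plugin's actual configuration format.

```python
import copy
from dataclasses import dataclass


@dataclass
class Guardrails:
    max_iterations: int = 10                   # stop and report after this many attempts
    max_files_per_iteration: int = 3           # hypothetical change limit
    escalate_on: tuple = ("PermissionError",)  # error types that need a human


def guarded_loop(files, propose, check, rails):
    """Run propose/check cycles on `files` (name -> content) within the guardrails.

    Returns (status, files). For "change_limit" and "escalated", the returned
    files are the checkpoint taken before the offending iteration.
    """
    for i in range(1, rails.max_iterations + 1):
        checkpoint = copy.deepcopy(files)      # checkpoint save
        changes = propose(files, i)            # name -> new content
        if len(changes) > rails.max_files_per_iteration:
            return "change_limit", checkpoint  # reject the oversized change
        files.update(changes)
        error = check(files)                   # None means success
        if error is None:
            return "success", files
        if error in rails.escalate_on:
            return "escalated", checkpoint     # roll back and ask a human
    return "max_iterations", files


def propose(files, i):
    # Toy proposer: the second attempt gets it right.
    return {"a.py": "fixed" if i >= 2 else "half fixed"}


def check(files):
    return None if files["a.py"] == "fixed" else "AssertionError"


status, result = guarded_loop({"a.py": "bug"}, propose, check, Guardrails())
print(status, result)  # prints success {'a.py': 'fixed'}
```

Returning a status string alongside the files keeps the stop reason explicit, so a morning review can tell a clean success apart from a loop that hit a limit.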

The Debug Scenario

The pattern proved most valuable for debugging.

Traditional debugging: run program, see error, guess at cause, make change, run again, see if fixed or if new error appeared. Human-in-the-loop on every cycle.

Autonomous debugging: “This program crashes with error X. Diagnose and fix. Keep iterating until it runs successfully. Report what you tried.”

Ryan gave Claude a stubborn memory leak. Six iterations to trace the cause through three function calls, identify the allocation without deallocation, implement the fix, and verify resolution.

“Six iterations would have taken me half a day with context-switching and other interruptions. Claude did it in thirty minutes while I was in a meeting.”

The Cost Consideration

Autonomous loops used more tokens.

Each iteration meant more processing. A ten-iteration loop cost roughly ten times as much as a single interaction, and could cost more when later iterations carried the context of earlier ones.

“I reserved autonomous mode for problems worth the compute. Simple fixes weren’t worth the overhead. Complex, iterative problems were.”

The calculus: autonomous was expensive per minute, but cheap per human hour saved.
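The trade-off is simple arithmetic. In the sketch below every number is made up for illustration (token counts, price, hours saved, hourly rate), and it assumes each iteration uses about the same number of tokens, which is the simplification behind the "roughly ten times" estimate.

```python
def loop_cost(iterations, tokens_per_iteration, price_per_million_tokens):
    """Estimated spend, assuming every iteration uses about the same token count."""
    return iterations * tokens_per_iteration * price_per_million_tokens / 1_000_000


def worth_it(loop_spend, hours_saved, hourly_rate):
    """True when the human time saved is worth more than the loop costs."""
    return hours_saved * hourly_rate > loop_spend


# Hypothetical figures, not measurements from the article.
single = loop_cost(1, 20_000, 5.0)
ten = loop_cost(10, 20_000, 5.0)

print(ten / single)  # how many times more the loop costs than one interaction
print(worth_it(ten, hours_saved=0.5, hourly_rate=60.0))
```

With these placeholder values the loop costs ten times a single interaction but still comes out far cheaper than the half hour of engineer time it replaces. The same check fails quickly for a fix that would take a human two minutes.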

The Night Runs

Ryan started letting autonomous tasks run overnight.

“I’d set up a complex refactoring goal at 6 PM. By 8 AM, Claude had iterated through twelve files, made thirty-seven changes, and everything was passing.”

The computer worked while he slept. Morning reviews instead of morning execution.

“It felt like having a junior developer who worked 24/7 on tedious tasks without complaining.”

The Failure Cases

Not everything succeeded.

Some problems had no good solution. The loop would hit max iterations and report: “Tried X approaches. None succeeded. Here’s what I learned about why this might be unsolvable without human architecture decisions.”

Some iterations made things worse before making them better. The guardrails helped, but occasionally Ryan had to roll back and restart with better constraints.

“Autonomous doesn’t mean infallible. It means tireless within its capability limits.”

The Philosophical Shift

The experience changed how Ryan thought about AI assistance.

“Most people think of AI as giving answers. Autonomous loops are about AI doing work — iterative, effortful work that adjusts based on feedback.”

The shift: from AI as oracle to AI as worker. Not “tell me the answer” but “work on this until it’s done.”

The Community Buzz

Others had discovered similar patterns.

Online discussions called it “the closest thing to AGI we have” — not because it was truly general intelligence, but because the autonomous persistence felt qualitatively different from single-shot interactions.

“When Claude keeps trying, learns from failure, and eventually succeeds without human intervention… it feels like something has changed. Even if technically it’s just a loop.”

The Current State

Ryan now used autonomous loops regularly.

Test suite maintenance. Documentation syncing. Configuration migrations. Data cleaning. Any task that required iteration and could be evaluated programmatically.

“The key is clear success criteria. Claude needs to know when it’s done. If success is vague, the loop doesn’t know when to stop.”
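A success criterion is "clear" when a program can check it without judgment. One common form is a command's exit code: zero means done, anything else means keep going. The sketch below assumes that convention; `criterion_met` is a hypothetical helper, and the two commands are stand-ins that simply exit with a fixed status.

```python
import subprocess
import sys


def criterion_met(command):
    """Return True when the command exits with status 0."""
    completed = subprocess.run(command, capture_output=True)
    return completed.returncode == 0


# Stand-in commands so the example runs anywhere Python does.
passing = [sys.executable, "-c", "raise SystemExit(0)"]
failing = [sys.executable, "-c", "raise SystemExit(1)"]

print(criterion_met(passing))  # prints True
print(criterion_met(failing))  # prints False
```

In a real project the command might be a test runner such as `["pytest", "-q"]`, which exits with 0 only when every collected test passes. A goal like "make the code cleaner" has no equivalent check, which is why a loop built around it has no reliable way to stop.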

He estimated autonomous mode saved him ten hours weekly on tasks he previously did manually or avoided because they were too tedious.

The Future Projection

Ryan saw autonomous agents as the trajectory.

“Right now, I configure loops manually. In the future, the AI will recognize when autonomous iteration is appropriate and switch modes automatically.”

The current state was powerful but primitive. Explicit loop setup. Manual guardrails. Human-defined success criteria.

“Someday, you’ll just say ‘fix this’ and the AI will decide whether to try once or iterate ten times based on problem characteristics. We’re not there yet, but I can see it from here.”