TL;DR

- 37 specialized AI agents organized into 6 swarms built an entire startup autonomously

- RARV (Reason-Act-Reflect-Verify) cycles produced a reported 2-3x quality improvement through self-verification

- Triple reviewer pattern runs code, business logic, and security reviews in parallel

- Best for: Organizations needing extreme speed for prototyping, market exploration, or crisis response

- Key lesson: Specialization beats generalization; meta-orchestration is critical for agent coordination

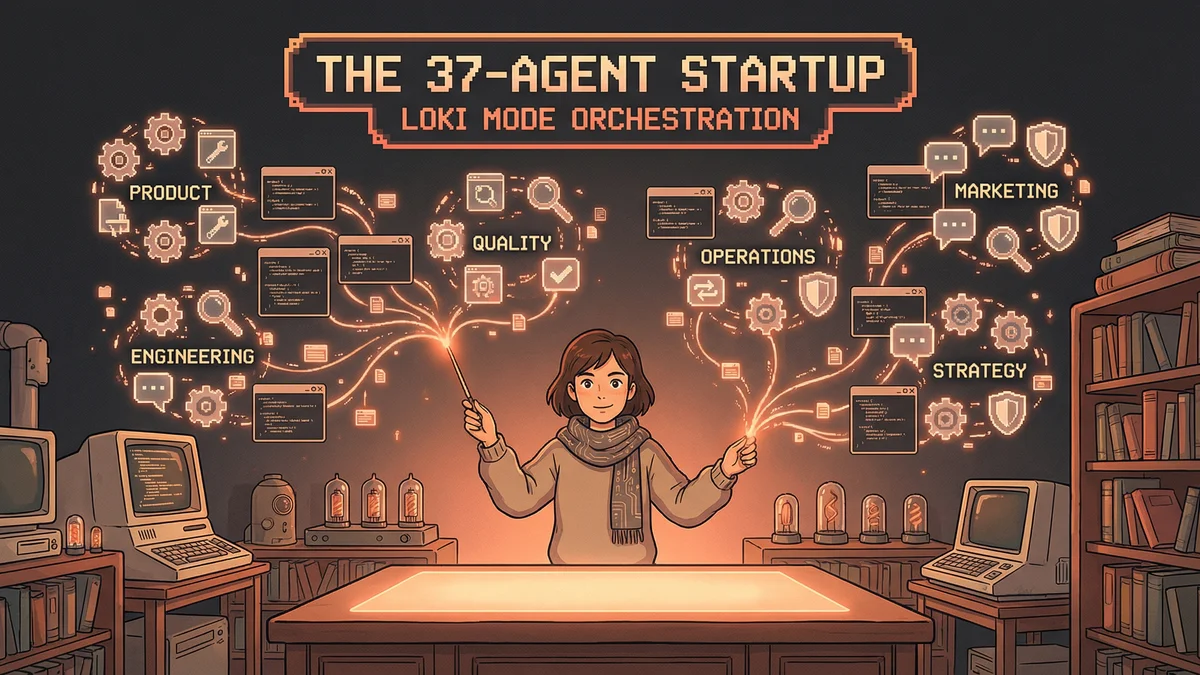

A multi-agent system called Loki Mode demonstrates that 37 specialized AI agents, organized into coordinated swarms, can autonomously build a complete startup, including product, engineering, marketing, and operations.

The idea sounded impossible.

Thirty-seven AI agents. Six coordinated swarms. Building a startup — not just code, but product, marketing, operations — autonomously.

Loki Mode wasn’t a thought experiment. It was a working system.

“We wanted to see how far autonomous orchestration could go. Turns out, pretty far.”

The Swarm Architecture

Loki Mode organized agents into specialized swarms.

Product Swarm: Agents focused on requirements, specifications, feature definitions.

Engineering Swarm: Agents building code — frontend, backend, infrastructure.

Quality Swarm: Agents testing, reviewing, validating.

Operations Swarm: Agents handling deployment, monitoring, maintenance.

Marketing Swarm: Agents creating content, messaging, positioning.

Strategy Swarm: Agents analyzing market, competition, opportunities.

Six swarms. Thirty-seven specialized agents total. Each with defined responsibilities.
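One way to picture that structure is as a small registry that tags every agent with its swarm and its responsibility. This is only a sketch: the `Swarm`, `Agent`, and `group_by_swarm` names are hypothetical, the three example agents are taken from the engineering roles mentioned above, and nothing here reflects how Loki Mode actually stores its roster.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Swarm(Enum):
    """The six swarms named in the article."""
    PRODUCT = "product"
    ENGINEERING = "engineering"
    QUALITY = "quality"
    OPERATIONS = "operations"
    MARKETING = "marketing"
    STRATEGY = "strategy"


@dataclass(frozen=True)
class Agent:
    """Hypothetical record for one specialized agent."""
    name: str
    swarm: Swarm
    responsibility: str


def group_by_swarm(agents: list[Agent]) -> dict[Swarm, list[str]]:
    """Index agent names by the swarm they belong to."""
    grouped: dict[Swarm, list[str]] = defaultdict(list)
    for agent in agents:
        grouped[agent.swarm].append(agent.name)
    return dict(grouped)


# Illustrative entries only; the real roster of 37 agents is not listed here.
EXAMPLE_AGENTS = [
    Agent("frontend", Swarm.ENGINEERING, "build the user interface"),
    Agent("backend", Swarm.ENGINEERING, "build services and APIs"),
    Agent("infrastructure", Swarm.ENGINEERING, "provision environments"),
]

if __name__ == "__main__":
    print(group_by_swarm(EXAMPLE_AGENTS))
```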

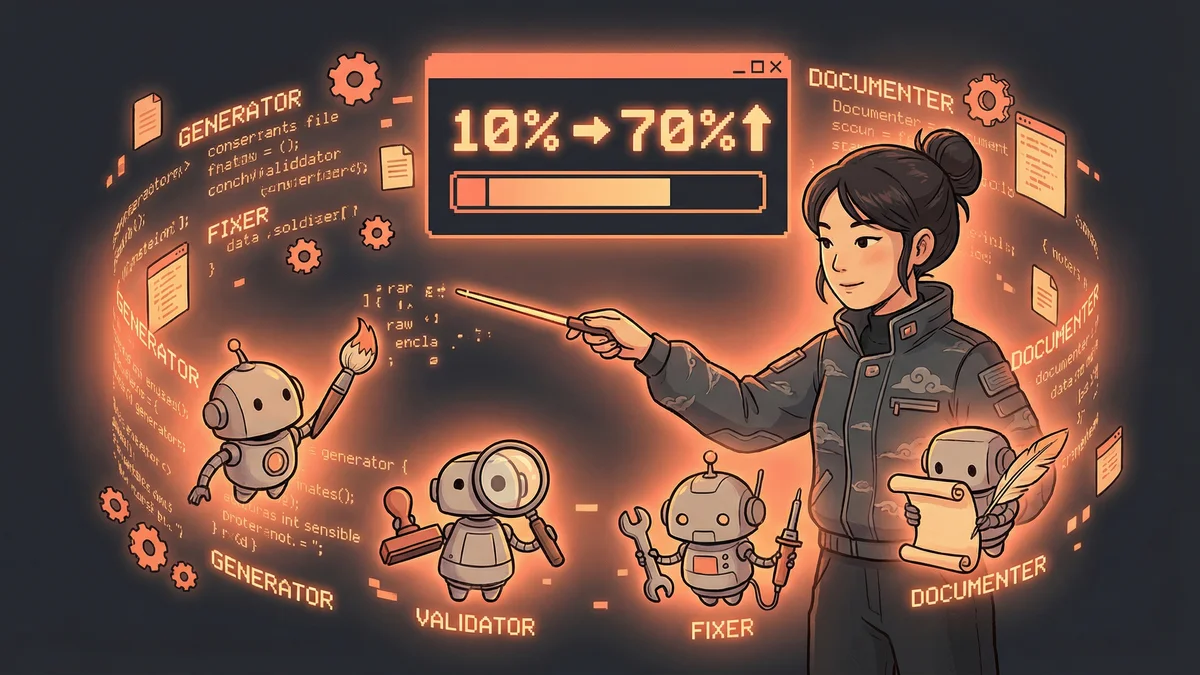

The RARV Cycle

What made agents effective wasn’t just parallelism. It was self-improvement.

Loki Mode implemented RARV cycles: Reason-Act-Reflect-Verify.

Reason: Before taking action, the agent reasons about the task. What’s needed? What are the risks? What’s the approach?

Act: Execute the planned action. Write the code. Create the content. Make the change.

Reflect: After acting, reflect on what happened. Did it work? What could be better? What was learned?

Verify: Independently verify the result. Check against requirements. Test for correctness.

The cycle repeated. Each iteration improved on the previous.
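The four steps can be sketched as a plain loop. This is a minimal illustration, assuming each step is a callable supplied by the caller; `run_rarv`, `RarvResult`, and the `demo_*` helpers are hypothetical names, and the toy helpers stand in for what would be model calls in a real system.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class RarvResult:
    output: str
    verified: bool
    iterations: int
    notes: list[str] = field(default_factory=list)


def run_rarv(
    task: str,
    reason: Callable[[str, list[str]], str],
    act: Callable[[str], str],
    reflect: Callable[[str, str], str],
    verify: Callable[[str, str], bool],
    max_iterations: int = 3,
) -> RarvResult:
    """Repeat Reason -> Act -> Reflect -> Verify until verification passes."""
    notes: list[str] = []
    output = ""
    for iteration in range(1, max_iterations + 1):
        plan = reason(task, notes)            # Reason: plan, using earlier reflections
        output = act(plan)                    # Act: produce the artifact
        notes.append(reflect(task, output))   # Reflect: record what could be better
        if verify(task, output):              # Verify: independent check
            return RarvResult(output, True, iteration, notes)
    return RarvResult(output, False, max_iterations, notes)


# Toy stand-ins for the four steps (assumptions, not Loki Mode code).
def demo_reason(task: str, notes: list[str]) -> str:
    return task if not notes else f"{task} (fix: {notes[-1]})"


def demo_act(plan: str) -> str:
    return "Hello, world!" if "fix:" in plan else "hello"


def demo_reflect(task: str, output: str) -> str:
    return "ok" if output.endswith("!") else "missing punctuation"


def demo_verify(task: str, output: str) -> bool:
    return output == "Hello, world!"


if __name__ == "__main__":
    print(run_rarv("greet", demo_reason, demo_act, demo_reflect, demo_verify))
```

In the toy run, the first pass fails verification, the reflection note feeds into the second pass's reasoning, and the second pass succeeds. The iteration cap matters: without it, an agent that can never satisfy its verifier would loop forever.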

The Quality Multiplier

RARV cycles produced measurable improvement.

“We saw 2-3x quality improvement through self-verification. Agents caught their own mistakes before they propagated.”

Traditional single-pass generation produced output with errors. RARV cycles caught and corrected errors in the same session.

The Triple Reviewer Pattern

Code review happened in parallel, not in sequence.

When an engineering agent completed code, three reviewer agents examined it simultaneously:

Code Reviewer: Technical quality. Style. Patterns. Correctness.

Business Logic Reviewer: Does the code implement what was specified? Are edge cases handled?

Security Reviewer: Vulnerabilities. Exposures. Attack surfaces.

Three perspectives. Three agents. Running in parallel.

“We got comprehensive review in the time it normally takes for one review. And the perspectives caught different issues.”
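A rough sketch of the pattern, using `asyncio.gather` to run the three reviews concurrently. The reviewer functions and their string checks are placeholders invented for illustration (a real reviewer would call a model), and `apply_discount` is a made-up requirement.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class Review:
    reviewer: str
    approved: bool
    findings: list[str]


async def code_review(code: str) -> Review:
    await asyncio.sleep(0)  # stands in for a model call
    findings = ["bare except clause"] if "except:" in code else []
    return Review("code", not findings, findings)


async def business_logic_review(code: str) -> Review:
    await asyncio.sleep(0)
    # Hypothetical requirement: the spec asks for an apply_discount function.
    findings = [] if "def apply_discount" in code else ["apply_discount missing"]
    return Review("business_logic", not findings, findings)


async def security_review(code: str) -> Review:
    await asyncio.sleep(0)
    findings = ["use of eval"] if "eval(" in code else []
    return Review("security", not findings, findings)


async def triple_review(code: str) -> list[Review]:
    """Run all three reviewers concurrently; results keep the call order."""
    reviews = await asyncio.gather(
        code_review(code),
        business_logic_review(code),
        security_review(code),
    )
    return list(reviews)


if __name__ == "__main__":
    sample = "def apply_discount(price):\n    return eval(price)\n"
    for review in asyncio.run(triple_review(sample)):
        print(review)
```

Because the reviewers share no state, the total wall-clock time is roughly that of the slowest reviewer rather than the sum of all three.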

The Orchestration Layer

With 37 agents, coordination was critical.

A meta-orchestrator managed the swarms. It understood dependencies. Knew which agents needed to wait for others. Handled failures and retries.

“You can’t just start 37 agents and hope they coordinate. You need explicit orchestration.”

The orchestrator used queues, locks, and dependency graphs. Enterprise-grade infrastructure for enterprise-grade autonomy.
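To make the dependency-graph part concrete, here is a small sketch built on Python's standard `graphlib.TopologicalSorter`. The task names and the `execution_waves` helper are assumptions for illustration; the article does not describe how Loki Mode represents its graph.

```python
from graphlib import TopologicalSorter


def execution_waves(dependencies: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; every task in a wave can run in parallel.

    `dependencies` maps each task to the set of tasks it must wait for.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)                 # unblock whatever depends on them
    return waves


# Hypothetical task graph.
EXAMPLE_GRAPH = {
    "spec": set(),
    "frontend": {"spec"},
    "backend": {"spec"},
    "tests": {"frontend", "backend"},
    "deploy": {"tests"},
}

if __name__ == "__main__":
    for number, wave in enumerate(execution_waves(EXAMPLE_GRAPH), start=1):
        print(number, wave)
```

In this example, `frontend` and `backend` land in the same wave because both only wait on `spec`, which is exactly the "which agents need to wait for others" question the orchestrator has to answer.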

The Startup Pipeline

The system could instantiate a startup concept from scratch.

Phase 1 — Strategy: Strategy swarm analyzes the market. Identifies opportunity. Defines positioning.

Phase 2 — Product: Product swarm translates strategy into specifications. Features. Requirements. User stories.

Phase 3 — Engineering: Engineering swarm builds the product. Frontend. Backend. Infrastructure.

Phase 4 — Quality: Quality swarm tests everything. Finds bugs. Verifies specifications are met.

Phase 5 — Operations: Operations swarm deploys. Sets up monitoring. Prepares for launch.

Phase 6 — Marketing: Marketing swarm creates launch content. Messaging. Positioning. Outreach.

End-to-end startup creation. From concept to deployed product.
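The six phases can be sketched as a pipeline in which each swarm reads the artifacts produced so far and adds its own. Everything here is illustrative: `run_pipeline`, the `Artifacts` dictionary, and the lambda "swarms" are hypothetical stand-ins, not the system's actual interfaces.

```python
from typing import Callable

Artifacts = dict[str, str]
Phase = tuple[str, Callable[[Artifacts], str]]


def run_pipeline(concept: str, phases: list[Phase]) -> Artifacts:
    """Run phases in order; each one sees everything produced before it."""
    artifacts: Artifacts = {"concept": concept}
    for name, run_swarm in phases:
        artifacts[name] = run_swarm(artifacts)
    return artifacts


# Placeholder swarms: each returns a short string describing its output.
PHASES: list[Phase] = [
    ("strategy", lambda a: f"positioning for {a['concept']}"),
    ("product", lambda a: f"spec based on: {a['strategy']}"),
    ("engineering", lambda a: f"build of: {a['product']}"),
    ("quality", lambda a: f"test report for: {a['engineering']}"),
    ("operations", lambda a: f"deployment of: {a['engineering']}"),
    ("marketing", lambda a: f"launch copy using: {a['strategy']}"),
]

if __name__ == "__main__":
    for phase, artifact in run_pipeline("pet-sitting marketplace", PHASES).items():
        print(f"{phase}: {artifact}")
```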

The Token Economics

Thirty-seven agents consumed significant resources.

“This isn’t cheap. You’re paying for 37 simultaneous thought processes.”

But compared to hiring 37 employees? The economics still favored automation for many tasks.

“The question isn’t ‘is this expensive?’ It’s ‘is this cheaper than the alternative?’”

The Human Role

Loki Mode didn’t eliminate humans. It changed what they did.

Humans provided:

- Initial direction and constraints

- High-level strategic decisions

- Exception handling when agents got stuck

- Final approval before deployment

“Think of it as managing a company of AI employees. You’re the CEO, not the worker.”

The Failure Recovery

With 37 agents, some would fail.

The orchestrator handled failures gracefully. Retry logic. Fallback strategies. Escalation to human review when retries failed.

“Individual agent failures don’t stop the system. The orchestrator routes around problems.”

Resilience was designed in. Not every agent needed to succeed for the system to progress.
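The retry, fallback, and escalation chain might look something like the sketch below. The names `run_with_recovery`, `EscalateToHuman`, and `make_flaky` are invented for this example, and the retry count is an arbitrary default rather than a value from Loki Mode.

```python
from typing import Callable, Optional


class EscalateToHuman(Exception):
    """Raised when retries and the fallback have all failed."""


def run_with_recovery(
    primary: Callable[[], str],
    fallback: Optional[Callable[[], str]] = None,
    max_retries: int = 2,
) -> str:
    """Try the primary agent, retry on failure, then fall back, then escalate."""
    errors: list[str] = []
    for attempt in range(1, max_retries + 2):  # first try plus max_retries retries
        try:
            return primary()
        except Exception as exc:
            errors.append(f"primary attempt {attempt}: {exc}")
    if fallback is not None:
        try:
            return fallback()
        except Exception as exc:
            errors.append(f"fallback: {exc}")
    raise EscalateToHuman("; ".join(errors))


def make_flaky(failures: int) -> Callable[[], str]:
    """Build a demo task that fails `failures` times and then succeeds."""
    remaining = {"count": failures}

    def task() -> str:
        if remaining["count"] > 0:
            remaining["count"] -= 1
            raise RuntimeError("agent timed out")
        return "done"

    return task


if __name__ == "__main__":
    print(run_with_recovery(make_flaky(2)))
    print(run_with_recovery(make_flaky(10), fallback=lambda: "fallback result"))
```

Collecting the error messages along the way means the human who receives the escalation sees the whole failure history, not just the last exception.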

The Coordination Protocols

Agents communicated through defined protocols.

Shared memory stored state that multiple agents needed. Task queues distributed work. Status channels reported progress.

“It’s like distributed systems engineering, but the nodes are AI agents instead of servers.”

The same patterns that make microservices work made agent swarms work.
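A toy version of those three primitives, using threads as stand-in agents: a task queue hands out work, a lock-protected dictionary plays the role of shared memory, and a second queue acts as the status channel. The function names and the uppercase "work" are placeholders, not anything from Loki Mode.

```python
import queue
import threading


def agent_worker(
    name: str,
    tasks: "queue.Queue[str]",
    status: "queue.Queue[tuple[str, str, str]]",
    shared: dict[str, str],
    lock: threading.Lock,
) -> None:
    """Pull tasks until the queue is empty, publishing results and status."""
    while True:
        try:
            task = tasks.get_nowait()
        except queue.Empty:
            return
        result = task.upper()              # placeholder for real agent work
        with lock:                         # guard writes to shared memory
            shared[task] = result
        status.put((name, task, "done"))   # report progress


def run_agents(task_names: list[str], agent_count: int = 3):
    tasks: "queue.Queue[str]" = queue.Queue()
    status: "queue.Queue[tuple[str, str, str]]" = queue.Queue()
    shared: dict[str, str] = {}
    lock = threading.Lock()
    for task_name in task_names:
        tasks.put(task_name)
    threads = [
        threading.Thread(
            target=agent_worker,
            args=(f"agent-{i}", tasks, status, shared, lock),
        )
        for i in range(agent_count)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    events = []
    while not status.empty():  # safe here: all workers have finished
        events.append(status.get())
    return shared, events


if __name__ == "__main__":
    memory, events = run_agents(["spec", "build", "test", "deploy"])
    print(memory)
    print(events)
```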

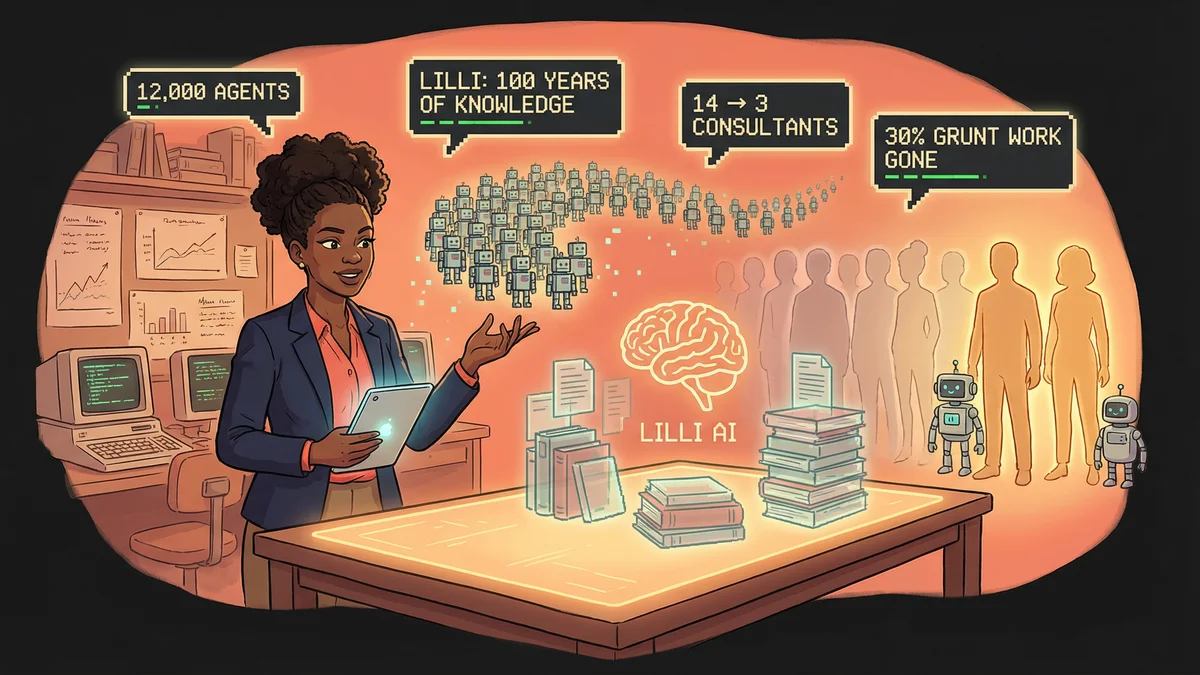

The Specialization Advantage

Generalist agents struggled with deep expertise.

Loki Mode’s agents were specialists. The frontend agent knew React patterns. The security agent knew OWASP. The marketing agent knew content strategy.

“Specialization allowed each agent to be genuinely good at its domain. You don’t ask the security agent to write marketing copy.”

The combination of specialists exceeded what any generalist could achieve.

The Emergence Observation

Sometimes the swarm’s behavior was surprising.

“We’d see the marketing swarm adjust messaging based on what the engineering swarm built. They weren’t explicitly coordinated — they just read each other’s outputs.”

Emergent behavior arose from agents responding to shared context. Not programmed. Spontaneous.

The Limitations Reality

The system had boundaries.

Novel problems without precedent stumped agents. Truly creative decisions required human input. High-stakes choices needed human approval.

“This is an automation system, not a replacement for human judgment. It handles the 80% that’s automatable. Humans handle the 20% that isn’t.”

The Speed Factor

The parallelism was powerful.

“Tasks that would be sequential with a human team — build, then test, then deploy, then market — happened simultaneously.”

The startup pipeline that might take months with a small team compressed into days.

The Emerging Use Cases

Organizations began exploring specific applications:

Rapid prototyping: Validate ideas quickly by building functioning prototypes.

Market exploration: Launch multiple variants simultaneously to test assumptions.

Crisis response: Spin up solutions rapidly when speed matters more than perfection.

“It’s not about replacing normal product development. It’s about having a capability when you need extreme speed.”

The Governance Question

With autonomous agent swarms, governance became important.

“Who’s responsible when 37 agents make a collective decision? What are the guardrails?”

Loki Mode included safety constraints. Approval gates. Human checkpoints. The automation had boundaries.
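An approval gate can be as simple as a wrapper that refuses to run a high-stakes action until a human says yes. The sketch below assumes a boolean `high_stakes` flag and an `approve` callback; both are hypothetical, as is the `gated_execute` name.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Action:
    name: str
    high_stakes: bool


def gated_execute(
    action: Action,
    execute: Callable[[Action], str],
    approve: Callable[[Action], bool],
) -> str:
    """Run low-stakes actions directly; ask for approval on high-stakes ones."""
    if action.high_stakes and not approve(action):
        return f"blocked: {action.name}"
    return execute(action)


if __name__ == "__main__":
    def run(action: Action) -> str:
        return f"executed: {action.name}"

    def deny_all(action: Action) -> bool:
        return False  # stands in for a human reviewer saying no

    print(gated_execute(Action("update copy", False), run, deny_all))
    print(gated_execute(Action("deploy to production", True), run, deny_all))
```

The agent never decides for itself whether approval is needed; the gate sits outside the agent, which is what makes it a guardrail rather than a suggestion.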

The Current State

Loki Mode continued evolving.

More agents. More swarms. More coordination intelligence. The system learned from each deployment.

“Thirty-seven agents was a milestone. Not a ceiling.”

The architecture supported scaling. More agents for larger problems. Fewer for simpler ones. Adaptive to the task.

The Implications

The 37-agent startup represented a threshold.

“We’ve crossed from ‘AI assists’ to ‘AI operates.’ Not just helping humans — running entire workflows.”

The implications for organizations: capabilities that previously required teams could be instantiated on demand.

“You don’t need to hire 37 people. You need to orchestrate 37 agents.”