TL;DR

- Green (safe): Brainstorming, grammar checking, concept explanations, study aids, research discovery

- Amber (context-dependent): Outlining, paraphrasing your work, getting feedback - disclose these uses

- Red (not acceptable): Submitting AI text as your own, fabricating citations, hiding AI use when asked

- Best for: Students unsure what AI use is allowed, anyone wanting clear ethical boundaries

- Key test: “Could I explain any part of this work in office hours?” If yes, proceed. If no, you’ve gone too far.

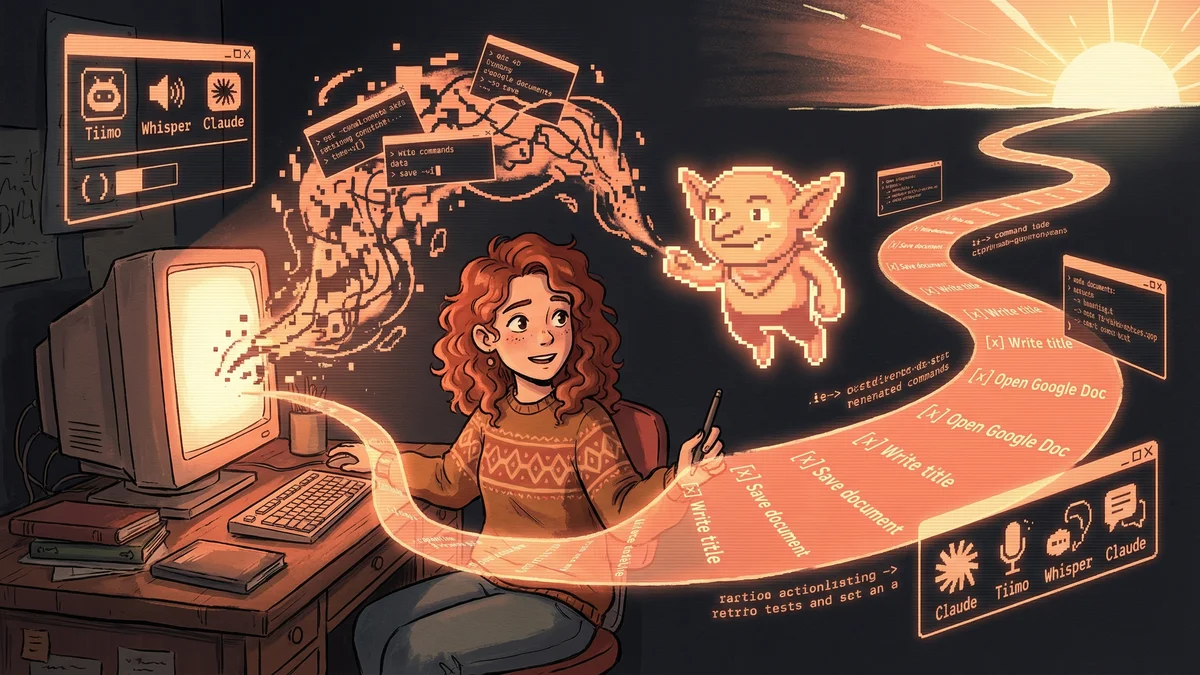

The traffic light framework gives students clear guidelines for ethical AI use in coursework: green uses are generally safe, amber requires disclosure, and red crosses into academic dishonesty.

Jordan had a question that kept her up at night:

Am I cheating?

She used ChatGPT to brainstorm essay topics. She used Grammarly to fix her grammar. She used Claude to explain concepts she didn’t understand from lecture.

Some professors said AI was fine. Some said it was forbidden. Most said nothing at all, leaving students to guess.

Jordan wasn’t trying to cheat. She was trying to learn efficiently. But the line between “using tools” and “academic dishonesty” felt impossibly blurry.

Then she found the traffic light framework.

Red, Amber, Green

One useful way to think about AI ethics in education:

Green - Generally Acceptable

These uses are almost always fine:

Brainstorming and idea generation: Using AI to generate topic ideas, outline possibilities, or research angles. You’re using it for inspiration, not output.

Grammar and clarity checking: Running text through Grammarly or Hemingway, or asking AI to “fix grammar issues in this paragraph.” This is proofreading assistance, not writing for you.

Concept explanation: Asking AI to explain something you don’t understand, such as “What is supply elasticity?” or “Explain this calculus concept in simpler terms.” This is tutoring.

Research discovery: Using tools like Elicit, Perplexity, or Semantic Scholar to find sources. This is enhanced searching, not fundamentally different from using a library database.

Study aids: Having AI generate flashcards, practice questions, or summaries of YOUR notes. This is study preparation.

Translation and accessibility: Using AI for text-to-speech, translating a source so you can understand it, or simplifying language. This is accessibility support.

Amber - Permitted with Disclosure

These uses are context-dependent. Often okay if acknowledged, problematic if hidden:

Outlining assistance: Having AI help structure an essay or paper. Many instructors consider this acceptable if you do the actual writing; others want you to develop organizational skills yourself.

Paraphrasing your own work: Using AI to rephrase sentences you wrote for clarity. Fine if it’s your ideas being polished. Problematic if you don’t understand the polished version.

Feedback before submission: Asking AI “what’s weak about this paragraph?” and then improving it yourself. Usually acceptable, but check the course policy.

Code debugging help: For CS assignments, using AI to find bugs in code you wrote. Often fine for learning, but some courses want you to debug without help.

Foreign language checking: Using AI to check your writing in a language you’re learning. Some instructors allow this; others want to assess your unassisted ability.

The key: If it’s amber, disclose it. A simple note: “I used ChatGPT to help organize my outline” or “Grammar checked with Grammarly AI” protects you and respects the instructor.

Red - Generally Not Acceptable

These uses cross the line into academic dishonesty at most institutions:

Submitting AI-generated text as your own: Having AI write your essay, paper, or assignment and turning it in. This is the equivalent of having someone else do your work.

Using AI during closed or proctored exams: If an exam forbids outside resources, AI is an outside resource.

Fabricating citations: Using AI to generate realistic-looking but fake references. This is academic fraud.

Bypassing the learning objective: If an assignment exists to teach you something and you have AI do that thing, you’ve undermined the purpose.

Hiding AI use when asked directly: If an instructor asks whether you used AI and you lie, that’s dishonesty.

The Test That Works

Jordan developed a personal test for any AI use:

“Could I explain this in office hours?”

If a professor asked her about any sentence, concept, or choice in her paper, could she explain it? Could she justify it? Could she show she understood it?

If yes: the AI use was probably appropriate. She used AI as a tool but retained ownership of the work.

If no: she’d gone too far. The AI had produced something she couldn’t have produced or didn’t understand.

This test isn’t about detection. It’s about integrity. The question isn’t “will I get caught?” It’s “is this actually my work?”

Real Scenarios, Worked Through

Scenario 1: The essay angle

Jordan needed to write about climate policy economics. She asked ChatGPT:

“What are some possible angles for an essay on the economic impacts of climate policy?”

It returned six ideas. She picked one and developed it into her thesis.

Verdict: Green. She used AI for brainstorming, then did the thinking and writing. The analysis was hers.

Scenario 2: The struggling paragraph

Jordan wrote a paragraph but it felt clunky. She pasted it into Claude:

“This paragraph is awkward. Suggest improvements while keeping the same meaning.”

Claude offered a rewrite. Jordan took some suggestions, rejected others, and revised.

Verdict: Amber. She was polishing her own ideas, but she should disclose that AI assisted with editing, especially if the course policy asks for it.

Scenario 3: The chemistry problem

Jordan couldn’t solve a chemistry problem. She asked ChatGPT to solve it, then copied the answer.

Verdict: Red. She didn’t learn chemistry. The homework was supposed to teach her. She bypassed the learning.

Better approach: Ask ChatGPT to explain the method, then solve it herself. Or use it to check her answer after attempting.

Scenario 4: The literature review

Jordan used Elicit to find papers and generate summaries. She read the abstracts but not the full papers, and cited them anyway.

Verdict: Red. Citing papers you haven’t read is dishonest, regardless of how you found them.

Better approach: Use Elicit for discovery, then actually read the sources you cite - at least the relevant sections.

Scenario 5: The professor asks

Jordan’s professor asked, “Did you use AI for this assignment?”

Jordan used ChatGPT for brainstorming and Grammarly for proofreading.

Correct answer: “Yes - I used ChatGPT to brainstorm topic ideas and Grammarly for grammar checking.” This is honest and shows legitimate use.

Wrong answer: “No.” Even if the use was minor and allowed, lying creates the misconduct.

When Policies Conflict

What if one professor says AI is fine and another says it’s forbidden?

Solution: Treat each course separately.

Write down each instructor’s policy at the start of the semester. When working on an assignment, check that course’s rules - not your general habits.

Some students create a simple reference:

| Course | AI Policy |
|---|---|
| English 101 | AI for brainstorming OK, not for drafting |
| Calc 2 | No AI on problem sets |
| Psych 201 | Full disclosure required, otherwise OK |
| CS 301 | AI debugging OK, AI code generation not OK |

This prevents accidentally violating a policy because you were in “general AI mode.”

What If There’s No Policy?

Many professors haven’t stated an AI policy. What then?

Default to conservative use:

- Green uses are almost certainly fine

- Amber uses should be disclosed or asked about

- Red uses should be avoided unless explicitly permitted

Or ask directly:

“Professor, I want to clarify the AI policy for this course. Is it acceptable to use AI for [specific use]?”

Most instructors appreciate the question. It shows integrity. And their answer protects you.

The Self-Interest Argument

Beyond ethics, there’s a practical reason to use AI responsibly: you’re paying to learn.

If AI does your homework, you learn less. If you learn less, you’re worse at your future job. If you’re worse at your job, you’ve wasted tuition money on a credential without skills.

Jordan thought about this whenever tempted to have AI write something:

“I’m paying $X per credit hour. This assignment is supposed to teach me something. If AI does it, I’ve paid for nothing.”

The goal isn’t to graduate. The goal is to graduate competent.

The Future Argument

Students entering the workforce will use AI tools. That’s almost certain. But here’s what employers actually want:

- People who understand their field (not just people who can prompt)

- People who can verify AI outputs (requires knowing the subject)

- People who know when AI is appropriate (requires judgment)

If you over-rely on AI in school, you might graduate without the foundational knowledge needed to supervise AI in your career.

“I used AI throughout college” is only impressive if you can also demonstrate expertise. Otherwise, you’re just someone who knows how to copy-paste.

Jordan’s Framework Summary

After much trial and error, Jordan settled on these rules:

1. Does the instructor allow it? Check the policy. When in doubt, ask.

2. Am I learning? If AI is teaching me, great. If AI is replacing my learning, stop.

3. Could I explain it? If someone questioned any part of my submission, could I defend it knowledgeably?

4. Am I being transparent? If asked, would I be comfortable describing my AI use?

5. Would I be okay if everyone did this? If all students used AI the way I’m using it, would the course still work? Would grades still be meaningful?

If every answer is yes, proceed. If any answer is no, reconsider.

The Honest Path

Jordan graduated with a 3.6 GPA. She used AI throughout college - for brainstorming, studying, research, grammar checking. She learned to use it skillfully.

She never got caught cheating, because she never cheated.

“The weird thing is, using AI ethically actually taught me more,” she says. “When you can’t just copy an AI essay, you have to understand what makes writing good. When you use AI to explain concepts, you have to engage with the explanations. It’s more work, but better work.”

The traffic lights aren’t about rules. They’re about becoming someone who can stand behind their work.